by Philip Merrill - July 2018

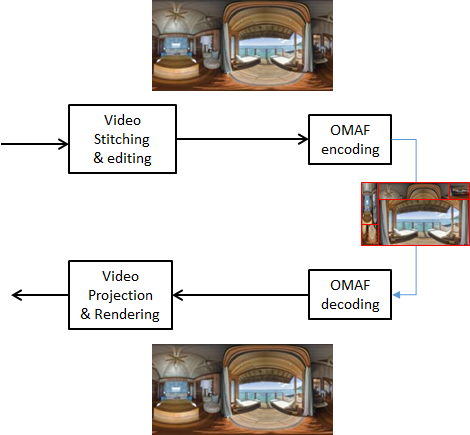

Immersive media is a broad challenge that MPEG-I has taken on, and the Omnidirectional MediA Format (OMAF) supports its first round of requirements. OMAF was created to accelerate a needed development: getting past the burden of large file sizes so that virtual reality content can be exchanged more effectively. The moving picture takes on a new reality when presented in 360-degree omnidirectional formats. As virtual reality has become more common through immersive headsets, consoles, mobile phones and more, the need for reliable digital formats has taken on fresh urgency. Through MPEG-I, and OMAF in particular, digital packaging considerations and requirements have been resolved with care for signaling issues, interoperability and suitably narrow scope. For example, the ISO Base Media File Format (ISO BMFF) has been extended with a set of base VR features, so that formatting in files or in segments for streaming is supported through backward-compatible mechanisms for encapsulating omnidirectional media data and signaling related metadata, enabling optimized delivery and rendering.

It is not just the emerging VR industry that desires efficient means of immersive media content storage, distribution and rendering. This is a concern of everyone working in the immersive media creative space because integrating the captured media elements together can feel like a big-data assignment. For video, audio and timed text, OMAF posits a sphere with three degrees of freedom, within which media data units are assigned their places with descriptive support relevant to helping rendering engines make automated decisions. In addition to addressing VR-specific concerns, compatibility is built in with MPEG's CMAF, DASH and MMT, ensuring coverage for varied profiles and use cases, such as on-the-fly personal advertising in live streaming.

Curvature is an essential property of the 360-degree 3D coordinate space within the geometric sphere of viewing possibilities. Pictures are consumed from a viewpoint facing out from the intersection of the xyz axes, i.e., the center of the sphere, and need a corresponding and appropriate viewport to be displayed. Audio media data, in either AAC or 3D Audio, has inherently immersive properties, but the HEVC, AVC, or JPEG visual assets are delivered in 2D format. Metadata instructions inform the rendering player or engine. The rendering process itself is outside the scope of the OMAF standard, although the standard's features were designed to give the renderer what it needs to present the immersive environment. OMAF supports complex player and rendering functions by delivering the media data along with suitable metadata.
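The geometry described above can be illustrated with a minimal sketch. It assumes a convention in which x points forward, y to the left and z up, with viewing directions given as azimuth and elevation in degrees; the function names and the cone-shaped viewport test are hypothetical simplifications for illustration, not definitions taken from the OMAF specification.

```python
import math


def sphere_to_unit_vector(azimuth_deg, elevation_deg):
    """Convert a viewing direction in degrees to a unit vector on the sphere.

    Assumed convention: x forward, y left, z up. Azimuth rotates about z,
    elevation tilts toward z.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (
        math.cos(el) * math.cos(az),
        math.cos(el) * math.sin(az),
        math.sin(el),
    )


def angle_between_deg(a, b):
    """Great-circle angle in degrees between two unit vectors."""
    dot = sum(p * q for p, q in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(dot))


def in_circular_viewport(direction, viewport_center, half_angle_deg):
    """Simplified test: does `direction` fall inside a cone around the viewport center?"""
    return angle_between_deg(direction, viewport_center) <= half_angle_deg
```

A viewer at the center of the sphere looking straight ahead corresponds to `sphere_to_unit_vector(0, 0)`; a real player would use a rectangular viewport and account for roll, which this sketch leaves out.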

OMAF's coordinate system provides three degrees of rotational freedom in a conventional xyz coordinate space and supplies means of specifying viewport areas on the inside of the sphere's surface, looking out. Timed text or captions delivered through metadata require their own viewport-like spatial assignments and can be set either to remain at a fixed location within the sphere or to move with the user's viewpoint, so that the timed text remains visually stable despite head motions.
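The two timed-text placement modes can be contrasted in a small sketch. It considers yaw only, and the function names and the `follows_viewport` flag are hypothetical, chosen for illustration rather than taken from OMAF's metadata syntax.

```python
def wrap_degrees(angle):
    """Wrap an angle to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def caption_azimuth_in_view(caption_azimuth, head_yaw, follows_viewport):
    """Azimuth of a caption relative to the viewer's current gaze (yaw only).

    follows_viewport=True: caption_azimuth is an offset from the gaze, so
    head motion does not change where the caption appears.
    follows_viewport=False: the caption is pinned to the sphere, so it
    appears to shift opposite to the head rotation.
    """
    if follows_viewport:
        return wrap_degrees(caption_azimuth)
    return wrap_degrees(caption_azimuth - head_yaw)
```

With a head yaw of 90 degrees, a caption that follows the viewport stays at its 10-degree offset, while a caption pinned to the sphere at 10 degrees appears 80 degrees off to the other side.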

One HEVC-based profile supports viewport-independent video, while two further profiles, one HEVC-based and one AVC-based, support viewport-dependent omnidirectional video processing (encoding, delivery, decoding, and rendering). The curvature inherent in omnidirectional visuality must be developed onto a planar surface, that is, projected onto 2D for coding; reassembling it in the right place within the coordinate system is a later step after decoding. One advantage of versatile metadata is that it lets the client switch quickly between representations, so that delivery, decoding and rendering can be as efficient as possible.

The metadata signals to the player, which does the rendering, which form the video media data takes: an equirectangular projection (like a world map), a cubic format that flattens the picture onto the faces of a six-sided die, or fisheye video, which is remarkably efficient for capture. Like the others, fisheye images are projections of what is meant to be the view from inside the sphere, but unlike the others, they require only two fisheye lenses to capture 360-degree video.
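To make the world-map analogy concrete, here is a minimal sketch of the equirectangular mapping between a 2D picture and directions on the sphere. It assumes that the left edge of the picture corresponds to azimuth +180 degrees and the top edge to elevation +90 degrees; the function names are hypothetical, and the exact sample-location rules should be taken from the specification rather than from this illustration.

```python
def erp_pixel_to_sphere(col, row, width, height):
    """Map the center of an equirectangular pixel to (azimuth, elevation) in degrees.

    Assumed convention: azimuth runs from +180 (left) to -180 (right),
    elevation from +90 (top) to -90 (bottom).
    """
    u = (col + 0.5) / width
    v = (row + 0.5) / height
    azimuth = (0.5 - u) * 360.0
    elevation = (0.5 - v) * 180.0
    return azimuth, elevation


def sphere_to_erp_pixel(azimuth, elevation, width, height):
    """Inverse mapping: find the pixel that contains a given direction."""
    u = 0.5 - azimuth / 360.0
    v = 0.5 - elevation / 180.0
    col = min(width - 1, max(0, int(u * width)))
    row = min(height - 1, max(0, int(v * height)))
    return col, row
```

The straight-ahead direction (azimuth 0, elevation 0) lands in the middle of the picture, just as the prime meridian and equator cross at the center of a world map.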

The viewport-dependent video profiles support additional packing for regions. In addition to delivering multiple 2D video media data, OMAF offers uniquely omnidirectional features, such as guard margins for more seamless stitching at image transitions. Since the user's point of view has visual limits, high levels of detail do not need to be rendered for all 360 degrees of media data.

Whether streamed or stored, alternative viewport representations with varying granularity of detail can be readily available and switchable: for example, one representation for looking at a mountain and another for looking at a valley, with each appearing in the peripheral side view when the user looks at the other. Richer representations of the low-priority, low-quality regions are not immediately needed, but they must be available at the turn of a head.
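The mountain-and-valley example can be sketched as a simple selection rule on the client side. This is an illustration under stated assumptions only: the region names, the yaw-only comparison, and the `half_fov_deg` and `margin_deg` parameters are hypothetical, and OMAF does not prescribe any particular selection logic.

```python
def wrap_degrees(angle):
    """Wrap an angle to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def choose_qualities(region_azimuths, gaze_azimuth, half_fov_deg=45.0, margin_deg=15.0):
    """Pick a quality level per region from the current gaze direction (yaw only).

    Regions whose center lies within the field of view plus a safety margin
    get the detailed representation; everything else gets the lighter one.
    """
    qualities = {}
    for name, center in region_azimuths.items():
        offset = abs(wrap_degrees(center - gaze_azimuth))
        qualities[name] = "high" if offset <= half_fov_deg + margin_deg else "low"
    return qualities


# Hypothetical scene: the mountain is straight ahead, the valley directly behind.
SCENE = {"mountain": 0.0, "valley": 180.0}
```

Calling `choose_qualities(SCENE, 10.0)` favors the mountain, and after the user turns around, `choose_qualities(SCENE, 170.0)` favors the valley; the margin gives the client some slack to fetch the richer representation before it is fully in view.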

A wide variety of delivery alternatives have been made possible, thanks to the storage and delivery encapsulation mechanisms and also the existing efficiencies in AVC and HEVC. These are further leveraged by enabling the 2D frame to be packed with assigned regions so that more granular media data is delivered for priority areas, while lower-priority regions can be reduced to a smaller 2D area in the frame during coding.
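A minimal sketch can show how packing regions into the 2D frame trades resolution for area. It assumes each region is an axis-aligned rectangle that is only scaled, with no rotation or mirroring; the `Region` class, its field names and the example layout are hypothetical and do not reproduce OMAF's actual metadata syntax.

```python
from dataclasses import dataclass


@dataclass
class Region:
    # Rectangle in the projected (full-resolution) picture
    proj_x: int
    proj_y: int
    proj_w: int
    proj_h: int
    # Rectangle in the packed picture that is actually coded
    packed_x: int
    packed_y: int
    packed_w: int
    packed_h: int


def packed_to_projected(regions, px, py):
    """Map the center of a packed-picture pixel back to projected-picture coordinates.

    Returns None if the pixel does not belong to any region.
    """
    for r in regions:
        inside_x = r.packed_x <= px < r.packed_x + r.packed_w
        inside_y = r.packed_y <= py < r.packed_y + r.packed_h
        if inside_x and inside_y:
            scale_x = r.proj_w / r.packed_w
            scale_y = r.proj_h / r.packed_h
            return (
                r.proj_x + (px - r.packed_x + 0.5) * scale_x,
                r.proj_y + (py - r.packed_y + 0.5) * scale_y,
            )
    return None


# Hypothetical layout for a 3840x1920 projected picture: the priority half is
# kept at full resolution, the other half is halved in each dimension.
EXAMPLE_REGIONS = [
    Region(0, 0, 1920, 1920, 0, 0, 1920, 1920),
    Region(1920, 0, 1920, 1920, 1920, 0, 960, 960),
]
```

In this layout the low-priority half occupies a quarter of its original area in the coded frame, and the renderer uses the inverse mapping to put each decoded sample back in its place before projecting it onto the sphere.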

As the first VR standard of its kind, OMAF supports a range of rendering scenarios while simplifying many basics to satisfy MPEG-I's initial interoperability requirements. Variations and special efficiencies in OMAF serve industry needs and have been introduced by MPEG participants to meet them. Profiles for new conformance points can extend the whole system at a later stage. The benefits of interoperability arrive quickly, however, in making content more readily available.

While there is more ahead for MPEG-I and OMAF, the feature set of these initial OMAF profiles is meant to provide substantial help in the short term. This is true both because OMAF and the media codecs it supports manage and compress 360-degree data well and because interoperability generally allows distribution platforms to provide their users with a richer selection of content from a wider assortment of media creators. Like the immersive worlds themselves, the rest of the MPEG-I story remains to be explored.

Special thanks to Ye-Kui Wang, who contributed to this report.