MPEG-V Sensory Information

MPEG doc#: N10995

Date: October 2009

Author: Christian Timmerer

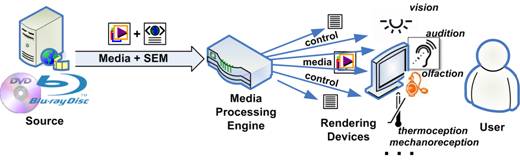

MPEG-V Part 3, Sensory Information, specifies the Sensory Effect Description Language (SEDL) [1], an XML Schema-based language for describing sensory effects such as light, wind, fog, and vibration that trigger human senses. The actual sensory effects are not part of SEDL; they are defined in the Sensory Effect Vocabulary (SEV), which keeps the language extensible and flexible and allows each application domain to define its own sensory effects. A description conforming to SEDL is referred to as Sensory Effect Metadata (SEM) and may be associated with any kind of multimedia content (e.g., movies, music, Web sites, games). The SEM is used to steer sensory devices such as fans, vibration chairs, and lamps via an appropriate mediation device in order to enhance the user’s experience. That is, in addition to the audio-visual content of, e.g., a movie, the user also perceives other effects such as the ones described above. This gives the user the sensation of being part of the particular media and is intended to result in a worthwhile, informative user experience. The concept of receiving sensory effects in addition to audio-visual content is depicted in Figure 1.

Figure 1. Concept of MPEG-V Sensory Effect Description Language [2].

The media and the corresponding SEM may be obtained from a Digital Versatile Disc (DVD), a Blu-ray Disc (BD), or any kind of online service (i.e., download/play or streaming). The media processing engine – sometimes also referred to as the RoSE Engine – acts as the mediation device. It is responsible for playing the actual media resource and the accompanying sensory effects in a synchronized way, based on the user’s setup for both media and sensory effect rendering. To this end, the media processing engine may adapt both the media resource and the SEM according to the capabilities of the various rendering devices.
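The adaptation and synchronization role described above can be illustrated with a minimal Python sketch. It is not taken from the standard: the `Effect` record, the normalized intensity scale, the `capabilities` dictionary, and the functions `adapt` and `due` are all hypothetical names chosen for illustration, and a real engine would work on SEM descriptions rather than on hand-built objects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Effect:
    """Hypothetical, simplified stand-in for one effect in a SEM description."""
    kind: str          # illustrative label such as "wind"; not an SEV type name
    start_s: float     # media time at which the effect starts, in seconds
    duration_s: float  # how long the effect lasts, in seconds
    intensity: float   # assumed normalized scale 0.0 .. 1.0


def adapt(effects, capabilities):
    """Drop effects the user's setup cannot render and clamp the rest.

    `capabilities` maps an effect kind to the maximum intensity the
    available device supports (an assumption of this sketch).
    """
    adapted = []
    for e in effects:
        max_intensity = capabilities.get(e.kind)
        if max_intensity is None:
            continue  # no device for this effect: skip it
        adapted.append(
            Effect(e.kind, e.start_s, e.duration_s, min(e.intensity, max_intensity))
        )
    # Order by start time so playback can walk the list alongside the media.
    return sorted(adapted, key=lambda e: e.start_s)


def due(effects, media_time_s):
    """Return the effects that should be active at the given media time."""
    return [e for e in effects if e.start_s <= media_time_s < e.start_s + e.duration_s]


sem = [
    Effect("wind", 12.0, 5.0, 0.9),
    Effect("fog", 3.0, 4.0, 0.5),
    Effect("light", 0.0, 20.0, 0.7),
]
capabilities = {"wind": 0.6, "light": 1.0}  # this setup has no fog machine
adapted = adapt(sem, capabilities)
```

In this sketch the fog effect is dropped because no device can render it, and the wind effect is reduced to the fan’s maximum. Calling `due(adapted, t)` with the player’s current media time `t` yields the effects to render at that moment.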

The Sensory Effect Vocabulary (SEV) defines a clear set of actual sensory effects to be used with the Sensory Effect Description Language (SEDL) in an extensible and flexible way. That is, it can easily be extended with new effects, or with effects derived from existing ones, thanks to the extensibility features of XML Schema. Furthermore, the effects are defined so as to abstract from the author’s intention and to be independent of the end user’s device setting, as depicted in Figure 2.

Figure 2. Mapping of Author’s Intentions to Sensory Effect Metadata and Sensory Device Capabilities [1].
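As a loose analogy to the extension by derivation described for the SEV, the following Python sketch uses class inheritance in place of XML Schema type derivation. The class names, fields, and the `describe` function are hypothetical and do not reproduce the types defined in the standard. The point is only that code written against a base effect keeps working when a derived effect is added.

```python
from dataclasses import dataclass


@dataclass
class BaseEffect:
    """Hypothetical common base: timing and intensity shared by all effects."""
    start_s: float
    duration_s: float
    intensity: float


@dataclass
class LightEffect(BaseEffect):
    """A plain light effect; adds nothing to the base in this sketch."""


@dataclass
class ColoredLightEffect(LightEffect):
    """Derived effect that extends the light effect with a color attribute."""
    color: str = "#FFFFFF"


def describe(effect: BaseEffect) -> str:
    """Works for any effect, including ones derived after this was written."""
    return f"{type(effect).__name__} at {effect.start_s}s for {effect.duration_s}s"


red = ColoredLightEffect(0.0, 2.0, 0.5, "#FF0000")
summary = describe(red)
```

A consumer that only understands `LightEffect` can still treat `red` as a light effect and ignore the extra `color` attribute.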

The sensory effect metadata elements or data types are mapped to commands that control sensory devices based on their capabilities. This mapping is usually provided by the RoSE Engine and is deliberately not defined in this standard, i.e., it is left open for industry competition. It is important to note that there is not necessarily a one-to-one mapping between elements or data types of the sensory effect metadata and sensory device capabilities. For example, the effect of hot/cold wind may be rendered on a single device with two capabilities, i.e., a heater/air conditioner and a fan/ventilator.
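The hot/cold wind example can be sketched as follows. Because the standard deliberately leaves this mapping undefined, everything here is an assumption made for illustration: the `Command` record, the capability names, the parameter keys, and the `map_effect` function are hypothetical and show only one of many possible mappings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    """Hypothetical device command: which capability to drive and how hard."""
    capability: str
    level: float


def map_effect(kind, params, available):
    """Translate one effect into zero or more commands.

    `available` is the set of capabilities present in the user's setup.
    One effect may fan out to several capabilities, so the mapping is
    not one-to-one.
    """
    wanted = []
    if kind == "wind":
        wanted.append(Command("fan", params["speed"]))
        temperature = params.get("temperature")
        if temperature == "hot":
            wanted.append(Command("heater", params.get("thermal_level", 1.0)))
        elif temperature == "cold":
            wanted.append(Command("air_conditioner", params.get("thermal_level", 1.0)))
    # Commands for capabilities that are not installed are silently dropped.
    return [c for c in wanted if c.capability in available]


hot_wind = {"speed": 0.8, "temperature": "hot"}
full_setup = map_effect("wind", hot_wind, {"fan", "heater"})
fan_only = map_effect("wind", hot_wind, {"fan"})
```

With both capabilities available, the single hot wind effect produces a fan command and a heater command. With only a fan, the same effect degrades to plain wind.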

Currently, the standard defines the following effects [1]:

- Light, colored light, flash light;

- Temperature;

- Wind;

- Vibration;

- Sprayer;

- Scent;

- Fog;

- Color correction;

- Motion;

- Kinesthetic;

- Tactile.

References

[1] C. Timmerer, S. Hasegawa, S.-K. Kim (eds.), “Working Draft of ISO/IEC 23005 Sensory Information,” ISO/IEC JTC 1/SC 29/WG 11/N10618, Maui, USA, April 2009.

[2] M. Waltl, C. Timmerer, H. Hellwagner, “A Test-Bed for Quality of Multimedia Experience Evaluation of Sensory Effects,” Proceedings of the First International Workshop on Quality of Multimedia Experience (QoMEX 2009), San Diego, USA, July 2009.