MPEG-4 Scene Description and Application Engine

MPEG doc#: N7608

October 2005, Nice

Authors: Alexandre Cotarmanac'h and Renaud Cazoulat (France Telecom), Yuval Fisher (Envivio)

Introduction

BIFS[1] is MPEG-4's scene description language, designed for representing, delivering and rendering interactive and streamable rich-media services (including audio, video, 2D & 3D graphics).

Background

The BIFS specification has been designed to allow for the efficient representation of dynamic and interactive presentations, comprising 2D & 3D[2] graphics, images, text and audiovisual material. The representation of such a presentation includes the description of the spatial and temporal organization of the different scene components as well as user-interaction and animations.

The main features of MPEG-4 BIFS are the following:

Seamless embedding of audio/video content: MPEG-4 BIFS allows different audio/video objects to be integrated and controlled seamlessly within a scene.

Rich set of 2D/3D graphical constructs: MPEG-4 BIFS provides a rich set of graphical constructs which enable 2D and 3D graphics. BIFS also provides tools that enable easy authoring of complex Face and Body Animation, tools for 3D mesh encoding, and representation of 2D and 3D natural and synthetic sound models.

Local and Remote Interactivity: BIFS defines elements that can interact with the client-side scene as well as with remote servers. Interactive elements allow for text input, mouse events, and other input devices that can trigger a variety of behaviors.

Local and Remote Animations: Scene properties such as object positions, colors and even shapes can be animated either by animations predefined in the scene description or by streams sent from a server.

Reuse of Content: MPEG-4 scenes can contain references to streamed sub-scenes. This means content can easily be reused, which is a powerful way to create a very rich user experience from relatively simple building blocks.

Scripted Behavior: MPEG-4 scenes can have two types of scripted behavior. A Java API (MPEG-J) can control and manipulate the scene graph, and built-in ECMAScript (JavaScript) support can be used to create complex behaviors, animations, and interactivity.

Streamable scene description: the spatial and temporal graphic layout is carried in a BIFS-Command stream. Such a stream operates on the scene graph through commands that insert, delete and replace its elements.

Accurate synchronization: Audio/visual content can be tightly synchronized with other A/V content and with client-side and server-driven scene animation, thanks to the underlying MPEG-4 Systems layer.

Compression: the scene description is binarized and compressed in an efficient way.
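The streamable scene description feature can be pictured as a list of timed commands applied to an in-memory scene graph. The Python sketch below is a simplified, hypothetical model: the Node class, the tuple command format and the helper names are illustrative assumptions, not the binary BIFS-Command syntax, which also covers fields, routes and whole-scene replacement.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """Simplified scene-graph node: an ID, a node type and ordered children."""
    node_id: str
    node_type: str
    children: list["Node"] = field(default_factory=list)


def find(root: Node, node_id: str) -> Optional[Node]:
    """Depth-first search for the node with the given ID."""
    if root.node_id == node_id:
        return root
    for child in root.children:
        hit = find(child, node_id)
        if hit is not None:
            return hit
    return None


def locate(root: Node, node_id: str) -> tuple[Node, int]:
    """Return (parent, index) of a non-root node; raise KeyError if it is absent."""
    stack = [root]
    while stack:
        parent = stack.pop()
        for i, child in enumerate(parent.children):
            if child.node_id == node_id:
                return parent, i
            stack.append(child)
    raise KeyError(node_id)


def apply_command(root: Node, cmd: tuple) -> None:
    """Apply one simplified update command to the scene graph in place."""
    kind = cmd[0]
    if kind == "insert":            # ("insert", parent_id, index, new_node)
        _, parent_id, index, new_node = cmd
        parent = find(root, parent_id)
        if parent is None:
            raise KeyError(parent_id)
        parent.children.insert(index, new_node)
    elif kind == "delete":          # ("delete", node_id)
        parent, i = locate(root, cmd[1])
        del parent.children[i]
    elif kind == "replace":         # ("replace", node_id, new_node)
        parent, i = locate(root, cmd[1])
        parent.children[i] = cmd[2]
    else:
        raise ValueError(f"unknown command {kind!r}")


# A command stream: (time stamp in seconds, command), applied in time order.
stream = [
    (0.0, ("insert", "root", 0, Node("title", "Text"))),
    (0.0, ("insert", "root", 1, Node("clip", "MovieTexture"))),
    (5.0, ("replace", "title", Node("title2", "Text"))),
    (9.0, ("delete", "clip")),
]

scene = Node("root", "OrderedGroup")
for _, command in sorted(stream, key=lambda tc: tc[0]):  # stable sort keeps same-time order
    apply_command(scene, command)

print([n.node_id for n in scene.children])  # ['title2']
```

Because the same commands could arrive from a server at any time, this also illustrates the "server push of scene updates" use case discussed later: the client never reloads the scene, it only patches it.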

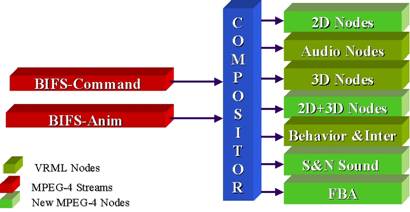

Figure 1 summarizes the different components of BIFS.

Figure 1 MPEG-4 Scene Description: BIFS-Command and BIFS-Anim streams used for scene description and animation. The compositor can render a variety of node types, from 2D graphics to face & body animation.

BIFS Design

MPEG-4 Systems follows an object-oriented, stream-based design. All presentations are described in a scene-graph, which is a hierarchical representation of audio, video and graphical objects, each represented by a (BIFS) node abstracting the interfaces to those objects. This allows an object's properties to be manipulated independently of the object's media. For example, a scene can define mechanisms to scale and animate the position of an image object, while the actual image data, a property of the object, is defined dynamically at connection time.

The benefits of such a design are many. First, it makes authoring easier: the scene-graph structure allows a high-level description of the presentation that is independent of how the media (video, images, audio, etc.) are coded. Second, it allows the set of elements to be extended or, conversely, restricted to those needed by applications in a particular market. Consequently, a terminal may understand only a subset of BIFS nodes; such subsets are called profiles. For instance, it is possible to use 2D-only profiles. Third, it gives a well-defined framework for building interaction between elements and dealing with user input. In effect, user input is also abstracted as BIFS nodes, e.g. TouchSensor and InputSensor, which can be connected to other nodes to express complex behaviors such as "when the user clicks this button, stop the video".
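The "click the button, stop the video" behavior can be sketched with the ROUTE mechanism BIFS inherits from VRML, where an output event of one node is wired to a field of another. In the VRML model, routing a TouchSensor's touchTime event to a MovieTexture's stopTime field stops the video at the moment of the click. The Python classes below (Node, Route, Scene) are a hypothetical in-memory model for illustration, not a BIFS API, and the loop guard is a simplification of VRML's event-cascade rules.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Node:
    """Hypothetical stand-in for a scene node: a type name plus named field values."""
    node_type: str
    fields: dict[str, Any] = field(default_factory=dict)


@dataclass(frozen=True)
class Route:
    """Wires an output field of one node to an input field of another, VRML-style."""
    src: str
    src_field: str
    dst: str
    dst_field: str


class Scene:
    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}   # DEF name -> node
        self.routes: list[Route] = []

    def emit(self, name: str, field_name: str, value: Any,
             _seen: Optional[set] = None) -> None:
        """Set a field and propagate the value along every route leaving it."""
        seen = set() if _seen is None else _seen
        if (name, field_name) in seen:
            return  # each field fires at most once per cascade, so routing loops end
        seen.add((name, field_name))
        self.nodes[name].fields[field_name] = value
        for r in self.routes:
            if r.src == name and r.src_field == field_name:
                self.emit(r.dst, r.dst_field, value, seen)


scene = Scene()
scene.nodes["BUTTON"] = Node("TouchSensor")
scene.nodes["VIDEO"] = Node("MovieTexture", {"startTime": 0.0, "stopTime": 0.0})
# "When the user clicks this button, stop the video."
scene.routes.append(Route("BUTTON", "touchTime", "VIDEO", "stopTime"))

scene.emit("BUTTON", "touchTime", 12.5)          # the user clicks at t = 12.5 s
print(scene.nodes["VIDEO"].fields["stopTime"])   # 12.5
```

The point of the design is that neither node knows about the other: the behavior lives entirely in the route, so the same TouchSensor could drive any other field without changing either node.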

In MPEG-4, every object is tightly coupled with a stream: this binding is made by means of the Object Descriptor Framework, which links an object to an actual stream. This design is natural for video objects that rely on a compressed video stream, but it has been pushed further: the scene description and the object descriptors are themselves carried as streams. In other words, the presentation itself is a stream which updates the scene graph and relies on a dynamic set of descriptors, which allow referencing the actual media streams.
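The indirection between a scene node and its media can be sketched as a lookup table that the object-descriptor stream keeps up to date. In this hypothetical Python model, ObjectDescriptor, ObjectDescriptorTable and the od_ref key are illustrative names; od_ref stands in for the node field that, in BIFS, points to an object descriptor rather than to a stream directly.

```python
from dataclasses import dataclass


@dataclass
class ObjectDescriptor:
    """Simplified object descriptor: an OD ID and the elementary streams it points to."""
    od_id: int
    es_ids: list[int]


class ObjectDescriptorTable:
    """Hypothetical terminal-side table, kept up to date by the object-descriptor stream."""

    def __init__(self) -> None:
        self._ods: dict[int, ObjectDescriptor] = {}

    def update(self, od: ObjectDescriptor) -> None:
        """An OD update in the stream adds or replaces a descriptor."""
        self._ods[od.od_id] = od

    def remove(self, od_id: int) -> None:
        """An OD removal in the stream withdraws a descriptor."""
        self._ods.pop(od_id, None)

    def resolve(self, od_id: int) -> list[int]:
        """Map a scene node's OD reference to the ES IDs to decode ([] if unknown)."""
        od = self._ods.get(od_id)
        return list(od.es_ids) if od is not None else []


# The scene only knows "the media of this MovieTexture is object 10".
video_node = {"type": "MovieTexture", "od_ref": 10}

table = ObjectDescriptorTable()
print(table.resolve(video_node["od_ref"]))   # [] : descriptor not received yet
table.update(ObjectDescriptor(10, [101]))
print(table.resolve(video_node["od_ref"]))   # [101]
table.update(ObjectDescriptor(10, [102]))    # the server swaps in another stream
print(table.resolve(video_node["od_ref"]))   # [102]
```

The scene node never changes in this example; only the descriptor does, which is what lets the actual media be bound dynamically.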

These design principles can be summarized in the following figure, which gives a visualization of a scene.

Figure 2 An MPEG-4 scene aggregating a set of different media streams

Target applications

MPEG-4 BIFS is particularly suited to some applications and is currently used in several market sectors. Below we list a subset of BIFS features and the types of products that these features enable. Table 1 lists applications and their characteristics. The figures below give an overview of content made possible through BIFS.

| Application | Size/Bandwidth | Profile |
|---|---|---|
| Tiny 2D animation (MMS) | 1 KB | Simple2D |
| Subtitles | ~1 kb/s | Core2D |
| Karaoke | ~3 kb/s | Main2D |
| Interactive Multimedia Portals | 100 KB / ~20 kb/s | Advanced2D |
| 3D cartoon | 150 KB / ~20 kb/s | 3D profile |
| 2D games | 100-500 KB | Advanced2D |

Table 1. Application types, their size and the corresponding MPEG-4 Systems profile.

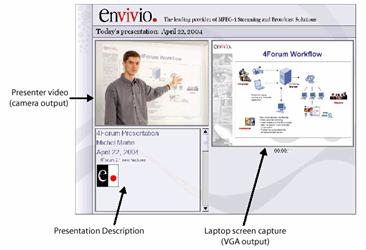

Feature: Integration and Synchronization of Multiple Streams

Corporate Presentation/Education broadcasts benefit from the ability to integrate multiple synchronized streams in an interactive presentation that includes zooming, picture-in-picture, broadcast (in the live case), chapter marks for random access and trick play (in the on-demand case).

Feature: Server Push of Scene Updates

In client-side middleware, such as the kind used for IPTV deployments, the ability to modify a client-side scene from the server is useful for sending metadata (Electronic Program Guide, Video on Demand library, etc.) and updating services (e.g., adding personalized pages).

Figure 3 Origami Mobile Portal (France Telecom) with EPG, Online Weather, Video and Games

Feature: Rich Scene Description

MPEG-4 BIFS’s ability to represent complex scenes can be used for applications ranging from e-commerce to entertainment.

Figure 4 Envivio TV Lounge with interactive shopping cart and video.

Figure 5 Dilbert 3D animation (France Telecom): 120 KB + 5 kb/s (anim) + 48 kb/s (AAC)

Figure 6 2D Cartoon (ENST)

References

[1] ISO/IEC 14496-11, Coding of audio-visual objects, Part 11: Scene description and Application engine (BIFS, XMT, MPEG-J)

[2] ISO/IEC 14496-20, Coding of audio-visual objects, Part 20: Lightweight Application Scene Representation (LASeR)

Audio BIFS

MPEG doc#: N8641

Date: October 2006

Authors: Jürgen Schmidt and Johannes Boehm (THOMSON)

Introduction

The MPEG-4 standard provides a powerful scene description language for 2D and 3D scenes and the whole infrastructure for the transmission and state-of-the-art (de)coding of audiovisual content. Key to this ability is the scene description language BIFS (BInary Format for Scenes) [1]. BIFS scene description is based on the same tree concept used in the VRML standard [2]. All functional blocks of a scene can be visualized as nodes of a tree, and the structure of the tree reflects the interdependency of the functional blocks.

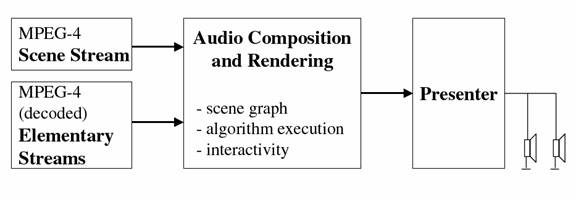

Figure 1: Simplified MPEG-4 audio player diagram

Scene information is transmitted in a composite data stream that consists of audio data in elementary streams and structural information in the scene stream, binary encoded in BIFS. Information in the scene stream includes the tree information as well as the description of the nodes themselves. The number of elementary audio streams is application dependent and can vary over time. Different audio coding algorithms exist for coding the elementary streams, e.g. MPEG-4 AAC.

Scene information and elementary streams are combined and processed in the composition unit or compositor. This unit receives and decodes the scene stream and is responsible for handling the nodes and the scene graph (tree) and their connections with the decoded elementary streams. The audio nodes themselves are responsible for any signal processing (rendering), initiated by the renderer. The output of the renderer is fed to the presenter, which in turn is responsible for mapping the audio signals to the available loudspeaker array. The principle is shown in Figure 1.

Audio Bifs

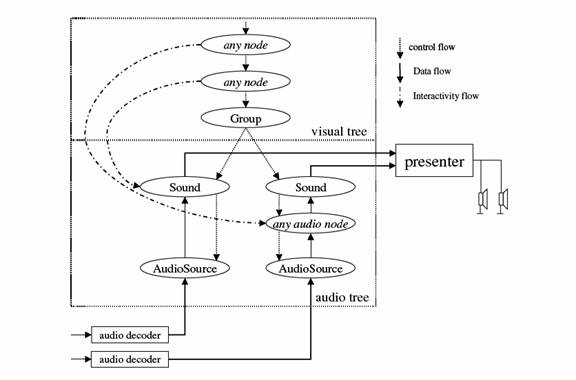

A simplified view of an audiovisual scene is given in Figure 2, showing control flow (scene control, field access), data flow (audio) and interactivity flow. The tree is logically divided into a visual part and an audio part [3]. The visual part contains all visual or interactive nodes. The nodes in the audio tree are responsible for the handling of audio content. The scene stream is decoded by the BIFS decoder (not shown) that instantiates the nodes and fills their property fields with properties arriving with the control flow.

Figure 2: Simplified scene graph diagram for an MPEG-4 scene

The audio nodes of the audio sub-tree determine the processing of the audio signals. Each node can be connected with any other node in the tree; crossings or multiple connections are allowed. The top node of the audio sub-tree is always a sound node. There are different types of sound nodes, for example the Sound or the Sound2D node. The top node interfaces to the presenter and can have only one child. The bottom node is always an AudioSource node or, for backward compatibility, an AudioClip node, whose usage should be avoided in new applications. The AudioSource node always interfaces to the decoded elementary-stream data.
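The structural rules in the previous paragraph can be expressed as a small check over a node tree. The Python sketch below is hypothetical: AudioNode and check_audio_subtree are illustrative names, the set of accepted top nodes is assembled from the sound nodes named in this article (an assumption, not an exhaustive list), and shared sub-trees (the multiple connections the text allows) are not modelled.

```python
from dataclasses import dataclass, field

# Top-level sound nodes named in this article (assumed list, not exhaustive).
SOUND_NODES = {"Sound", "Sound2D", "DirectiveSound", "WideSound", "SurroundingSound"}
# Allowed bottom nodes; AudioClip only for backward compatibility.
LEAF_NODES = {"AudioSource", "AudioClip"}


@dataclass
class AudioNode:
    node_type: str
    children: list["AudioNode"] = field(default_factory=list)


def check_audio_subtree(top: AudioNode) -> list[str]:
    """Return the structural rules the sub-tree breaks; an empty list means it passes."""
    problems = []
    if top.node_type not in SOUND_NODES:
        problems.append(f"top node is {top.node_type}, expected a sound node")
    if len(top.children) != 1:
        problems.append(f"top node has {len(top.children)} children, expected 1")

    def walk(node: AudioNode) -> None:
        # A node without children is a bottom node and must be a stream interface.
        if not node.children and node.node_type not in LEAF_NODES:
            problems.append(f"bottom node {node.node_type} is not AudioSource/AudioClip")
        for child in node.children:
            walk(child)

    for child in top.children:
        walk(child)
    return problems


# Two decoded streams mixed together, then attached to a 2D scene.
good = AudioNode("Sound2D", [
    AudioNode("AudioMix", [AudioNode("AudioSource"), AudioNode("AudioSource")]),
])
bad = AudioNode("AudioMix", [AudioNode("AudioSource"), AudioNode("AudioFX")])

print(check_audio_subtree(good))  # []
print(check_audio_subtree(bad))
```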

Audio Bifs version 3

Audio BIFS version 3 adds new functionality to Audio BIFS. Here is a brief overview of the nodes, grouped by version:

Audio Bifs

- AudioMix for mixing channels with each other according to an MxN matrix, where M is the total number of input channels from all children connected to the node and N is the number of output channels of the node.

- AudioSwitch for selectively switching input channels to the output channels of the node, similar to the AudioMix node.

- AudioBuffer for storage and replay of audio data, now replaced by AdvancedAudioBuffer.

- AudioFX for audio effects. This node uses "Structured Audio" [4, 5] to describe the effects and requires a Structured Audio compiler or interpreter for implementation.
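The AudioMix and AudioSwitch behavior described above amounts to matrix mixing of channels. The Python sketch below assumes each channel is a list of float samples and that the matrix has one row per input channel and one column per output channel (this orientation is an assumption of the sketch). audio_mix and audio_switch are illustrative helpers, not node implementations; the which_choice argument echoes the whichChoice field of the AudioSwitch node, but treat that correspondence as an assumption.

```python
def audio_mix(inputs: list[list[float]], matrix: list[list[float]]) -> list[list[float]]:
    """Mix M input channels into N output channels with an M x N gain matrix.

    inputs: M channels, each a list of samples of the same length.
    matrix: M rows by N columns; matrix[i][j] is the gain from input i to output j.
    """
    m = len(inputs)
    if len(matrix) != m:
        raise ValueError("the matrix needs one row per input channel")
    n = len(matrix[0]) if m else 0
    if any(len(row) != n for row in matrix):
        raise ValueError("every matrix row needs one gain per output channel")
    length = len(inputs[0]) if m else 0
    if any(len(ch) != length for ch in inputs):
        raise ValueError("all input channels must have the same length")

    out = [[0.0] * length for _ in range(n)]
    for i, channel in enumerate(inputs):
        for j in range(n):
            gain = matrix[i][j]
            if gain:  # skip silent routes
                for t, sample in enumerate(channel):
                    out[j][t] += gain * sample
    return out


def audio_switch(inputs: list[list[float]], which_choice: list[int]) -> list[list[float]]:
    """Pass through only the input channels flagged 1, as a 0/1 special case of audio_mix."""
    selected = [i for i, flag in enumerate(which_choice) if flag]
    matrix = [[1.0 if i == sel else 0.0 for sel in selected] for i in range(len(inputs))]
    return audio_mix(inputs, matrix)


left = [1.0, 0.5]
right = [0.0, 0.5]
print(audio_mix([left, right], [[0.5], [0.5]]))  # [[0.5, 0.5]]  stereo to mono
print(audio_switch([left, right], [0, 1]))       # [[0.0, 0.5]]  keep the right channel only
```

Expressing the switch as a mixing matrix with 0/1 gains mirrors the text's remark that AudioSwitch is similar to AudioMix.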

Advanced Audio Bifs (version 2, AABIFS)

- DirectiveSound

- AcousticScene

- AcousticMaterial

The DirectiveSound node is a top node similar to the Sound or Sound2D node with extended properties for the spatial presentation of sound sources with the support of the AcousticScene and AcousticMaterial nodes. Details can be found in [6, 7].

Audio Bifs version 3

- AudioChannelConfig accepts one or several channels of input audio, and describes how to turn these channels of input audio into one or more channels of output audio.

- Transform3DAudio enables 3D audio nodes also to be used in 2D scenes.

- WideSound is used to attach sound to a scene, giving it spatial qualities with a configurable widening for non-phase-related signals from its descendant audio nodes, and relating it to the visual content of the scene.

- SurroundingSound is used to attach sound to a scene, giving it spatial qualities and relating it to the visual content of the scene. It also handles multi-channel signals that cannot be spatially transformed with the other sound nodes because of their restrictions on the specification of the phaseGroup and spatialize fields.

- AdvancedAudioBuffer fixes, extends and replaces AudioBuffer

- AudioFXProto provides an implementation of a tailored subset of the functionality available through the AudioFX node. The AudioFX node normally requires a Structured Audio implementation (i.e. high computational power). These standard audio effects are identified by predefined values of the protoName field [1].

A more comprehensive review of Audio BIFS version 3 can be found in [8].

Target applications

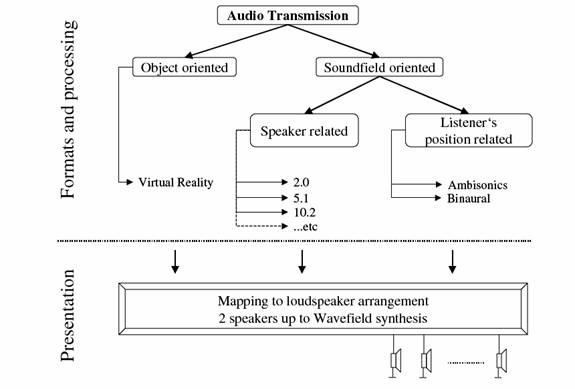

Target applications range from multimedia terminals to gaming applications. Audio BIFS composition and rendering support sound replay in a wide range of formats, from the well-known 2-channel stereo format to multi-channel formats with up to 16 channels. They also cover Ambisonics, the binaural transport format, and object-oriented virtual reality formats, as depicted in Figure 3.

Figure 3: Abstract view of an audio system with various audio formats

References

[1] ISO/IEC 14496-11:2005, Information technology, Coding of audio-visual objects, Part 11: Scene description and application engine

[2] ISO/IEC 14772-1:1997 The Virtual Reality Modeling Language (VRML97) 1997, www.web3d.org/specifications/VRML97/

[3] Scheirer, E. D.; Väänänen, R.; Huopaniemi, J.: "AudioBIFS: Describing audio scenes with the MPEG-4 multimedia standard", IEEE Transactions on Multimedia, vol. 1, no. 3, pp. 237-250, September 1999.

[4] Scheirer, Eric D.; Vercoe, Barry L.: "SAOL: The MPEG-4 Structured Audio Orchestra Language", Computer Music Journal, 23:2, pp. 31–51, 1999

[5] Vercoe, B. L.; Gardner, W. G.; Scheirer, E. D.: "Structured Audio: Creation, transmission, and rendering of parametric sound representations", Proc. IEEE, vol. 86, no. 5, pp. 922-940, 1998

[6] Dantele, A.; Reiter, U.; Schuldt, M.; Drumm, H.; Baum, O.: Implementation of MPEG-4 Audio Nodes in an Interactive Virtual 3D Environment. 114th AES Convention, Amsterdam, March 2003, preprint No. 5820

[7] Väänänen, Riitta: User Interaction and Authoring of 3D Sound Scenes in the Carrouso EU project. 114th AES Convention, Amsterdam, March 2003, preprint No. 5764

[8] Schmidt, Jürgen; Schröder, Ernst F.: New and Advanced Features for Audio Presentation in the MPEG-4 Standard. 116th AES Convention, Berlin, May 2004, preprint No. 6058

BIFS ExtendedCore2D profile

MPEG doc#: N11958

Date: March 2011

Author: Cyril Concolato (Telecom ParisTech)

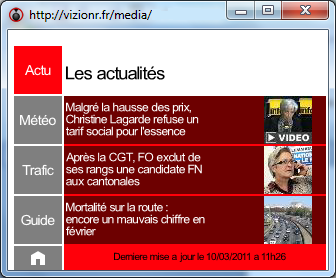

ISO/IEC 14496-11:2005/Amd.7:2010 is an amendment to the MPEG-4 BIFS standard which defines a new profile, called ExtendedCore2D. This amendment is intended to improve services such as T-DMB mobile television as deployed in South Korea, or Digital Radio services as shown in Figure 1. The goal of this profile is to enable richer services with reduced bandwidth requirements. It is based on the existing Core2D profile and extends it both with existing MPEG-4 BIFS tools not yet included in Core2D and with newly defined tools.

… and to fill or stroke objects with gradients using the LinearGradient or RadialGradient nodes. Using these nodes saves bandwidth, as some raster images can be replaced by more compact binary vector graphics representations. Finally, the visual quality of the new services can now remain intact when a scene designed for a smartphone screen is displayed on a larger tablet screen. An example of a Digital Radio Service on a tablet is shown in Figure 2.

Figure 1 - Example of BIFS Digital Radio Service – Usage of vector graphics

(courtesy of VizionR and Fun Radio)

New and richer services defined with the ExtendedCore2D profile also need more compact and more efficient tools for scene graph management, including layout description. For better scene management, the new profile includes the PROTO tool, to help reduce scene complexity and improve coding efficiency. In terms of layout, the new profile adds the ability to position objects, including text and vector graphics, in paragraphs with the Layout node. It also adds the possibility to transform, reuse, view and animate parts of the layout efficiently to achieve compact and appealing animations with the TransformMatrix2D, CompositeTexture2D and Viewport nodes.
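The PROTO tool (inherited from VRML) lets a scene define a reusable node template once, with an interface of fields, and then instantiate it many times with different values; roughly, only the differing values need to be described per instance. The Python sketch below models this idea. The Proto class, the dictionary node format and the "IS:" placeholder convention (borrowed from the IS keyword VRML uses to bind a PROTO's interface fields to its body) are illustrative assumptions, not the BIFS encoding.

```python
from copy import deepcopy
from dataclasses import dataclass
from typing import Any


@dataclass
class Proto:
    """Hypothetical PROTO model: interface fields with defaults plus a body template."""
    name: str
    interface: dict[str, Any]   # field name -> default value
    body: dict[str, Any]        # node description; "IS:<field>" marks an interface binding

    def instantiate(self, **overrides: Any) -> dict[str, Any]:
        """Build one concrete node tree, filling interface fields with defaults or overrides."""
        unknown = set(overrides) - set(self.interface)
        if unknown:
            raise TypeError(f"{self.name} has no field(s) {sorted(unknown)}")
        values = deepcopy({**self.interface, **overrides})  # instances never share defaults
        return _bind(self.body, values)


def _bind(node: Any, values: dict[str, Any]) -> Any:
    """Return a copy of node with every "IS:<field>" string replaced by its value."""
    if isinstance(node, dict):
        return {key: _bind(val, values) for key, val in node.items()}
    if isinstance(node, list):
        return [_bind(item, values) for item in node]
    if isinstance(node, str) and node.startswith("IS:"):
        return values[node[3:]]
    return node


button = Proto(
    "Button",
    interface={"label": "OK", "position": [0, 0]},
    body={
        "type": "Transform2D",
        "translation": "IS:position",
        "children": [{"type": "Text", "string": ["IS:label"]}],
    },
)

play = button.instantiate(label="Play", position=[10, 20])
stop = button.instantiate(label="Stop")
print(play["children"][0]["string"])  # ['Play']
print(stop["translation"])            # [0, 0]
```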

The BIFS amendment also defines several new tools to enrich services. Some of them target a reduction of the bandwidth required by BIFS services. In particular, the CacheTexture node enables the carriage of compressed images within the BIFS stream, removing the need for, and the signaling of, some elementary streams dedicated to raster image delivery. The bandwidth gain can be significant when the service contains many images, as shown in Figure 3. Other new tools provide new features for service authors. The EnvironmentTest node enables the design of services that adapt to viewing conditions, e.g. to the screen size, to the presence of a keypad, or to viewing in a car. The KeyNavigator node facilitates the design of navigation within rich scenes. With the Storage node, services can be designed to persist across activations and deactivations, offering users the restoration of their settings. Finally, in T-DMB environments, it can be useful to reuse, within the BIFS service, data carried outside the BIFS streams, such as Electronic Program Guides. For this purpose, a new type of BIFS update, called ReplaceToExternalData, is defined.
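The persistence offered by the Storage node can be pictured as saving selected values when a service is deactivated and reading them back on the next activation. The Python sketch below is hypothetical: the ServiceStorage class, its method names and the JSON-file back end are assumptions chosen for illustration; the article does not describe how a terminal stores the data.

```python
import json
import tempfile
from pathlib import Path


class ServiceStorage:
    """Hypothetical stand-in for the persistence role of the Storage node."""

    def __init__(self, directory: Path, name: str) -> None:
        self.path = Path(directory) / f"{name}.json"
        self.values: dict = {}

    def on_activate(self) -> dict:
        """Restore values saved by a previous activation, if any."""
        if self.path.exists():
            self.values = json.loads(self.path.read_text(encoding="utf-8"))
        return self.values

    def on_deactivate(self) -> None:
        """Write the current values out before the service is switched off."""
        self.path.write_text(json.dumps(self.values), encoding="utf-8")


with tempfile.TemporaryDirectory() as tmp:
    first = ServiceStorage(Path(tmp), "radio_settings")
    print(first.on_activate())        # {} : nothing saved yet
    first.values["station_index"] = 3
    first.values["volume"] = 7
    first.on_deactivate()

    second = ServiceStorage(Path(tmp), "radio_settings")  # a later activation
    restored = second.on_activate()
    print(restored)                   # {'station_index': 3, 'volume': 7}
```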

Figure 2 - Example of BIFS Digital Radio Service – News and non-synchronized screen with multiple images (courtesy of VizionR)

Figure 3 - Example of BIFS Digital Radio Service – News and non-synchronized screen with multiple images (courtesy of VizionR)

[1] BIFS stands for BInary Format for Scenes.

[2] Inherited from VRML.