MPEG-J Extension for rendering

MPEG doc#: N7410

Date: July 2005

Author: Vishy Swaminathan (Sun Microsystems Inc.), Mikaël Bourges-Sévenier (Mindego Inc.)

Introduction

Imagine this: the same movie content uses high-quality five-channel surround sound or low-quality mono audio, depending on the complexity and capabilities of the client device. Similarly, the interactive menu on the movie disc is adapted to the screen real estate and computing power of the client device. Or an educational video is delivered to your client device; once you complete each chapter, a quiz is administered, and its difficulty varies based on your responses. All of this is delivered as part of the same media stream. Is this possible? Yes! It is possible using MPEG-J (MPEG-4 Java), originally defined as part of the MPEG-4 Systems standard [1], which lets content creators embed simple or complex algorithmic control along with audio and video streams. This signals a paradigm shift: "media content" is no longer just binary data carrying compressed digital audio or video, but can also include “intelligent” computer programs, in a limited sense. These algorithms are implemented in the Java (TM) programming language, and the platform-independent executable byte code is streamed just like the audio and video streams.

Generally speaking, MPEG-4 defines compressed representations of audio and visual (not just video) objects. The Systems part of MPEG-4 specifies how these objects are decoded from the bit stream and presented in synchronization with other objects; the part of the terminal that does this is called the presentation engine. MPEG-4 also defines a Java application engine that specifies how applications are received, decoded, and executed at the client terminal. This part of the MPEG-4 specification is called MPEG-J.

MPEG-J defines a set of Java Application Programming Interfaces (APIs) to access and control the underlying MPEG-4 terminal that plays an MPEG-4 audio-visual session. An MPEG-J application (MPEGlet), which uses these APIs, can be embedded in the content as an MPEG-J elementary stream, similar to audio and video streams. MPEG-J defines a delivery mechanism to carry the Java byte code and Java objects that make up an MPEG-J application. Such an application can use complex algorithmic control to implement behavior that depends on time-varying terminal conditions. The standard defines the lifecycle and scope of an MPEG-J application, along with a strict security model that protects the MPEG-4 terminal from malicious applications. Together, these elements constitute the MPEG-J execution environment.
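
To make the notion of an application lifecycle concrete, here is a minimal Java sketch of a terminal-side manager that drives an applet-like application through init, start, stop, and destroy, and rejects out-of-order calls. The interface `AppLifecycle`, the enum `AppState`, and the state names are hypothetical; they are not the normative MPEG-J signatures, and the security model is not modeled.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical lifecycle states; not taken from ISO/IEC 14496-11.
enum AppState { LOADED, INITIALIZED, RUNNING, STOPPED, DESTROYED }

// Hypothetical applet-like contract an application would implement.
interface AppLifecycle {
    void init();
    void start();
    void stop();
    void destroy();
}

// Terminal-side manager: drives an application through its lifecycle
// and rejects transitions that would skip or repeat a step.
class LifecycleManager {
    private final AppLifecycle app;
    private AppState state = AppState.LOADED;

    LifecycleManager(AppLifecycle app) { this.app = app; }

    AppState state() { return state; }

    void init() {
        check(state == AppState.LOADED, "init");
        app.init();
        state = AppState.INITIALIZED;
    }

    void start() {
        // A stopped application may be started again.
        check(state == AppState.INITIALIZED || state == AppState.STOPPED, "start");
        app.start();
        state = AppState.RUNNING;
    }

    void stop() {
        check(state == AppState.RUNNING, "stop");
        app.stop();
        state = AppState.STOPPED;
    }

    void destroy() {
        if (state == AppState.RUNNING) stop(); // stop before tearing down
        check(state != AppState.DESTROYED, "destroy");
        app.destroy();
        state = AppState.DESTROYED;
    }

    private void check(boolean allowed, String op) {
        if (!allowed) throw new IllegalStateException(op + " not allowed in state " + state);
    }
}

class LifecycleDemo {
    public static void main(String[] args) {
        final List<String> log = new ArrayList<>();
        LifecycleManager mgr = new LifecycleManager(new AppLifecycle() {
            public void init()    { log.add("init"); }
            public void start()   { log.add("start"); }
            public void stop()    { log.add("stop"); }
            public void destroy() { log.add("destroy"); }
        });
        mgr.init();
        mgr.start();
        mgr.destroy();
        System.out.println(log); // [init, start, stop, destroy]
    }
}
```

Keeping the transition checks in the terminal, rather than trusting each application to behave, is one simple way to keep a misbehaving application from corrupting terminal state.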

Using the MPEG-J APIs, applications have programmatic access to the scene, network, and decoder resources. With MPEG-J support, clients can adapt gracefully to varying static or dynamic terminal conditions. Currently, most clients adapt to extreme resource conditions using some ad-hoc policy. With MPEG-J, the content creator can instead enforce such a policy by embedding it as an MPEGlet along with the content. MPEG-J also offers enhanced interactivity with clients and users: its added value is an algorithmic response to user interaction. Content creators can include MPEGlets that respond to user triggers, producing intelligent responses to user interactions. For example, a subset of the original scene can be displayed based on client capabilities or user preferences.
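
As an illustration of an author-supplied adaptation policy, the sketch below keeps the most important scene objects that fit within a terminal's processing budget and drops the rest, using a simple greedy, priority-ordered selection. The `MediaObject` fields (priority, cost in abstract units), the budget values, and the object names are assumptions for illustration; obtaining real terminal measurements through the MPEG-J APIs is not shown.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical description of a media object: an author-assigned priority
// (higher = more important) and an estimated decoding cost in abstract units.
class MediaObject {
    final String name;
    final int priority;
    final int cost;

    MediaObject(String name, int priority, int cost) {
        this.name = name;
        this.priority = priority;
        this.cost = cost;
    }
}

// Author-defined policy: greedily keep the most important objects that fit
// within the terminal's budget; lower-priority objects are dropped.
class AdaptationPolicy {
    static List<String> select(List<MediaObject> objects, int budget) {
        List<MediaObject> byPriority = new ArrayList<>(objects);
        byPriority.sort(Comparator.comparingInt((MediaObject o) -> o.priority).reversed());
        List<String> kept = new ArrayList<>();
        int used = 0;
        for (MediaObject o : byPriority) {
            if (used + o.cost <= budget) {
                kept.add(o.name);
                used += o.cost;
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<MediaObject> scene = Arrays.asList(
                new MediaObject("mainVideo", 10, 50),
                new MediaObject("dialogueAudio", 9, 5),
                new MediaObject("surroundBed", 5, 40),
                new MediaObject("animatedMenu", 2, 30));
        System.out.println(select(scene, 60));  // handheld: [mainVideo, dialogueAudio]
        System.out.println(select(scene, 200)); // set-top box: all four objects
    }
}
```

Because the policy travels with the content, the author, not each client vendor, decides which objects survive on a constrained device.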

MPEG-4 terminals range from high-quality entertainment/TV decoders to wireless and handheld devices. MPEG-J helps realize the goal of "create once, run anywhere": the same content can be adapted to run on clients with varying computational capabilities. MPEG-J also enables monitoring of network bandwidth (and packet losses), which helps content adapt to a wide range of dynamically varying network conditions. Application scenarios for MPEG-J include adaptive rich media content for wireless devices, multipurpose multimedia services, and multi-user 3D worlds.
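
One way an MPEGlet could react to network conditions is sketched below: it smooths throughput samples with an exponentially weighted moving average, discounts lost packets, and picks the highest available encoding that fits within a safety margin. The class `BandwidthAdapter`, the 80% headroom, and the sample values are assumptions; the sketch does not use the actual MPEG-J network API.

```java
import java.util.Arrays;

// Sketch of bandwidth-driven stream selection. Assumes the terminal reports
// one throughput sample (kbit/s) and one packet-loss ratio per interval;
// how those samples are obtained is not shown.
class BandwidthAdapter {
    private final int[] bitratesKbps; // available encodings, ascending
    private final double alpha;       // EWMA smoothing factor in (0, 1]
    private final double headroom;    // fraction of the estimate we dare to use
    private double estimateKbps = -1; // negative until the first sample

    BandwidthAdapter(int[] bitratesKbps, double alpha, double headroom) {
        this.bitratesKbps = bitratesKbps.clone();
        Arrays.sort(this.bitratesKbps);
        this.alpha = alpha;
        this.headroom = headroom;
    }

    // Fold a measurement into an exponentially weighted moving average of
    // goodput, i.e. throughput discounted by the share of lost packets.
    void onSample(double throughputKbps, double lossRatio) {
        double goodput = throughputKbps * (1.0 - lossRatio);
        estimateKbps = (estimateKbps < 0) ? goodput : alpha * goodput + (1 - alpha) * estimateKbps;
    }

    double estimate() { return estimateKbps; }

    // Highest encoding that fits within the usable share of the estimate;
    // falls back to the lowest encoding when nothing fits.
    int choose() {
        double usable = headroom * Math.max(estimateKbps, 0);
        int chosen = bitratesKbps[0];
        for (int b : bitratesKbps) {
            if (b <= usable) chosen = b;
        }
        return chosen;
    }

    public static void main(String[] args) {
        BandwidthAdapter a = new BandwidthAdapter(new int[] {64, 256, 768}, 0.5, 0.8);
        a.onSample(1000, 0.0);
        System.out.println(a.choose()); // 768
        a.onSample(200, 0.1);           // link degrades and loses packets
        System.out.println(a.choose()); // 256
    }
}
```

The smoothing factor trades reaction speed against stability: a larger `alpha` follows sudden drops faster but switches encodings more often.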

MPEG-J Architecture

Figure 1 shows the building blocks of an MPEG-J-enabled MPEG-4 terminal. BIFS defines the binary format for the parametric description of the scene. Because BIFS describes the spatio-temporal relationships of the audiovisual objects in the scene (see Chapter 4), it is used to build and present the scene. The part of the terminal that interprets BIFS and its related streams, and composes and presents them, is called the presentation engine. The execution engine decompresses the coded audiovisual streams. MPEG-J defines an application engine, which offers programmatic access to and control of the presentation and execution engines. Specifically, MPEG-J enables an application to access and modify the MPEG-4 scene and, to a limited extent, the network and decoder resources of the terminal. The MPEG-J APIs (the platform-supported Java APIs), together with the JVM, form the application engine that executes the delivered application. This application engine allows content creators to include programs in their content that intelligently control the MPEG-4 terminal depending on client conditions.
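
The following sketch suggests how an application might modify the scene through a narrow API: on narrow screens it switches off an optional menu by setting the `whichChoice` field of a Switch node to -1 (which selects no child). The interfaces `SceneAccess` and `SceneNode`, the DEF name `MENU_SWITCH`, and the 320-pixel threshold are hypothetical; the in-memory scene only stands in for the presentation engine. Because the code tolerates a missing node, the same logic can be reused with other scenes.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, heavily simplified scene access interfaces. The real MPEG-J
// scene API differs; SceneAccess, SceneNode and their methods are assumptions.
interface SceneNode {
    void setField(String field, Object value);
    Object getField(String field);
}

interface SceneAccess {
    // Returns the node with the given DEF name, or null if absent.
    SceneNode findNode(String defName);
}

// Toy in-memory stand-in for the presentation engine's scene graph.
class InMemoryScene implements SceneAccess {
    private final Map<String, SceneNode> nodes = new HashMap<>();

    void add(String defName) {
        final Map<String, Object> fields = new HashMap<>();
        nodes.put(defName, new SceneNode() {
            public void setField(String f, Object v) { fields.put(f, v); }
            public Object getField(String f) { return fields.get(f); }
        });
    }

    public SceneNode findNode(String defName) { return nodes.get(defName); }
}

// Application logic: on narrow screens, switch off an optional menu that is
// assumed to sit under a Switch node named MENU_SWITCH. Setting whichChoice
// to -1 selects no child. If the node is absent, nothing happens.
class MenuAdapter {
    static boolean hideMenuIfNarrow(SceneAccess scene, int screenWidth) {
        SceneNode menu = scene.findNode("MENU_SWITCH");
        if (menu == null || screenWidth >= 320) return false;
        menu.setField("whichChoice", -1);
        return true;
    }

    public static void main(String[] args) {
        InMemoryScene scene = new InMemoryScene();
        scene.add("MENU_SWITCH");
        System.out.println(hideMenuIfNarrow(scene, 176)); // true: menu switched off
        System.out.println(hideMenuIfNarrow(scene, 640)); // false: wide screen keeps it
    }
}
```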

As the MPEG-J programs (MPEGlets) are usually carried separately, they can be re-used with other audiovisual scenes. It is also possible to have these programs resident on the terminal, provided that they are written in a way that is independent of the scene and the elementary streams associated with it.

Figure 1 MPEG-J application engine.

The architecture of an MPEG-J enabled MPEG-4 player is shown in Figure 2. The parametric presentation engine of MPEG-4 Systems, Version 1, forms the lower half of Figure 2. The MPEG-J application engine (upper half of Figure 2) is used to control the presentation engine through well-defined application execution and delivery mechanisms. The MPEG-J application uses the MPEG-J APIs for this control.

Figure 2 MPEG-J-enabled MPEG-4 player architecture.

Applications can be local to the terminal, or carried in the MPEG-J stream and executed in the terminal. The term MPEGlet refers to a streamed MPEG-J program, while a local program is generically called an MPEG-J application. MPEGlets are required to implement the (Java applet-like) MPEGlet interface defined in the MPEG-J specification. MPEGlets and all other associated MPEG-J classes and objects are carried in an MPEG-J elementary stream. A received MPEGlet is loaded by the Class Loader (shown in Figure 2) and started at the time instants prescribed by the time stamps of the MPEG-J stream. The MPEG-J application uses the Scene, Network, and Resource managers to control the scene, network, decoder, and terminal resources.
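
Starting MPEGlets at the instants given by stream time stamps can be pictured as a priority queue ordered by start time that is drained as the media clock advances. This is a minimal sketch under stated assumptions: applications are represented as already-loaded `Runnable` objects, times are milliseconds on a hypothetical media clock, and class loading itself is omitted.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

// Sketch: start received applications at their stream time stamps. Assumes
// each application has already been loaded (e.g. by a class loader) into a
// Runnable entry point; loading is not shown. Times are media-clock ms.
class TimedLauncher {
    private static final class Pending {
        final long startMs;
        final long seq; // keeps arrival order among equal time stamps
        final Runnable app;

        Pending(long startMs, long seq, Runnable app) {
            this.startMs = startMs;
            this.seq = seq;
            this.app = app;
        }
    }

    private final PriorityQueue<Pending> queue = new PriorityQueue<Pending>(
            (a, b) -> a.startMs != b.startMs
                    ? Long.compare(a.startMs, b.startMs)
                    : Long.compare(a.seq, b.seq));
    private long nextSeq = 0;

    void schedule(long startMs, Runnable app) {
        queue.add(new Pending(startMs, nextSeq++, app));
    }

    // Called as the media clock advances: starts every application whose
    // time stamp has been reached and returns how many were started.
    int advanceTo(long clockMs) {
        int started = 0;
        while (!queue.isEmpty() && queue.peek().startMs <= clockMs) {
            queue.poll().app.run();
            started++;
        }
        return started;
    }

    public static void main(String[] args) {
        final List<String> log = new ArrayList<>();
        TimedLauncher launcher = new TimedLauncher();
        launcher.schedule(60_000, () -> log.add("chapter-1 quiz"));
        launcher.schedule(0, () -> log.add("menu"));
        launcher.advanceTo(1_000);
        launcher.advanceTo(61_000);
        System.out.println(log); // [menu, chapter-1 quiz]
    }
}
```

The sequence number is there because `PriorityQueue` does not preserve insertion order among equal elements; without it, two applications stamped for the same instant could start in either order.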

Target applications

Example application scenarios for MPEG-J include adaptive rich media content for wireless devices, enhanced interactive electronic program guides (EPG), enriched interactive digital TV, and content personalization.

Since MPEG-J uses Java, a rich programming language, the applications possible are limited only by the ingenuity of the content creator. MPEG-J is a major step towards delivering intelligent and interactive content. MPEG-J puts in place all the tools necessary for content creators to start producing even more interesting MPEG-4 content.

References

[1] ISO/IEC 14496-11, Coding of audio-visual objects, Part 11: Scene description and Application engine (BIFS, XMT, MPEG-J)

[2] ISO/IEC 14496-21, Coding of audio-visual objects, Part 21: MPEG-J Graphics Framework extension (GFX)

[3] James Gosling, Bill Joy and Guy Steele, The Java Language Specification. Addison-Wesley, September 1996

MPEG-J Graphical Framework eXtension (GFX)

MPEG doc#: N7409

Date: July 2005

Author: Mikaël Bourges-Sévenier (Mindego Inc.), Vishy Swaminathan (Sun Microsystems Inc.), Mark Callow (HI Corp.), Itaru Kaneko (Tokyo Polytechnic Univ.)

Introduction

MPEG-J Graphical Framework eXtension (GFX) [3] is a fully programmatic solution for creating custom interactive multimedia applications. In such applications, synthetic and natural media assets are composed in real time according to program logic expressed in a computer language. Java [7] was chosen because it is a secure, platform-independent, and efficient programming language that is ubiquitous in embedded devices. A GFX application therefore consists of a collection of Java classes and media assets received or downloaded by a terminal using the MPEG-J application engine [1]. GFX applications implement a Java interface called MPEGlet, which the terminal uses to bootstrap them. In this document, the terms ‘MPEGlet’ and ‘application’ are used interchangeably.
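
The bootstrapping step can be sketched with standard Java reflection: the terminal resolves the entry class by name, checks that it implements the entry interface, instantiates it through its no-argument constructor, and starts it. `MPEGletStub` and `HelloApp` are hypothetical stand-ins; the method set of the real MPEGlet interface is defined by the MPEG-J specifications and may differ.

```java
// Stand-in for the MPEGlet entry interface. Its method set is an assumption
// for this sketch, not the normative definition.
interface MPEGletStub {
    void init();
    void start();
}

// An application class as a terminal might receive it.
class HelloApp implements MPEGletStub {
    static boolean started;

    public void init()  { System.out.println("HelloApp: init"); }
    public void start() { started = true; System.out.println("HelloApp: start"); }
}

// Terminal-side bootstrap: resolve the entry class by name, check that it
// implements the entry interface, instantiate it, then init and start it.
class Bootstrapper {
    static MPEGletStub launch(String className, ClassLoader loader) throws ReflectiveOperationException {
        Class<?> cls = Class.forName(className, true, loader);
        if (!MPEGletStub.class.isAssignableFrom(cls)) {
            throw new IllegalArgumentException(className + " does not implement MPEGletStub");
        }
        MPEGletStub app = (MPEGletStub) cls.getDeclaredConstructor().newInstance();
        app.init();
        app.start();
        return app;
    }

    public static void main(String[] args) throws ReflectiveOperationException {
        launch(HelloApp.class.getName(), Bootstrapper.class.getClassLoader());
    }
}
```

Checking `isAssignableFrom` before instantiating means a class that does not implement the entry interface is rejected without running any of its constructor code.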

Background: application interoperability

In the last 10 years, many standards have tackled the difficult problem of application interoperability. Two approaches were followed:

- Interoperability using file formats to communicate between heterogeneous systems (client/server protocols), to abstract some processing operations (audio/video formats) and rendering operations (e.g. HTML, SVG, BIFS [1], Laser [2])

- Interoperability using a programming or scripting language (such as Java, ECMAScript, Python, Perl, or PHP) together with media assets that carry no embedded logic but possibly metadata to assist media processing.

With the first approach, animation consists of dynamically modifying the syntax defined in a format. For example, various visual effects can be produced in a web page by modifying HTML tags. Similarly, with formats such as BIFS and Laser, 2D/3D audio-visual effects are produced by modifying the scene with ECMAScript or Java. The range of applications is therefore limited by the format. Moreover, since the format is the central element of the application, data and control (logic) flow between the application and the hardware cannot be optimized. As the format gains features, or as content becomes more complex, the format becomes a significant bottleneck.

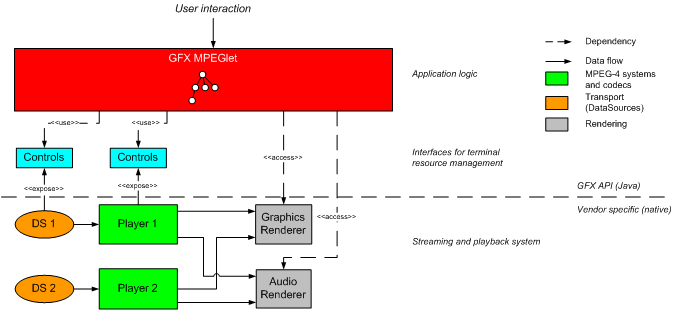

With the second approach, there is no application-level format; instead, application logic composes various media assets in real time by issuing commands to low-level (hardware) terminal resources. This is the GFX approach. Figure 1 depicts a high-level overview of a GFX-enabled terminal.

GFX design

An application is streamed or downloaded to a terminal. The application manager starts the class that implements the MPEGlet interface and manages its lifecycle. Once started, an application can retrieve composition data from various sources and send commands to renderers to produce audio-visual effects according to its logic and user interaction.
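
A minimal sketch of the "application sends commands to renderers" model follows: the application keeps its own state, updates it each frame from program logic and user input, and issues drawing commands. The `Renderer` interface, its methods, and the asset name are hypothetical; a real terminal would map such commands onto a rendering engine, and retrieval of composition data from data sources is not shown.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical low-level renderer interface: the application issues commands
// and the terminal executes them. This stand-in only records them.
interface Renderer {
    void clear();
    void drawSprite(String asset, int x, int y);
    void present();
}

class RecordingRenderer implements Renderer {
    final List<String> commands = new ArrayList<>();

    public void clear() { commands.add("clear"); }
    public void drawSprite(String asset, int x, int y) { commands.add("draw " + asset + " @" + x + "," + y); }
    public void present() { commands.add("present"); }
}

// The application keeps its own minimal "scene": one sprite whose position
// is driven by program logic and user input rather than by a scene format.
class BouncingLogo {
    private int x = 0;
    private int dx = 4;
    private final int width;

    BouncingLogo(int width) { this.width = width; }

    // One frame: update state from logic and input, then issue commands.
    void frame(Renderer r, boolean userPressedFire) {
        if (userPressedFire) dx = -dx;   // user interaction reverses direction
        x += dx;
        if (x < 0 || x > width) {        // bounce off the screen edges
            dx = -dx;
            x += 2 * dx;
        }
        r.clear();
        r.drawSprite("logo", x, 10);
        r.present();
    }

    public static void main(String[] args) {
        RecordingRenderer r = new RecordingRenderer();
        BouncingLogo logo = new BouncingLogo(8);
        logo.frame(r, false);
        logo.frame(r, true);
        System.out.println(r.commands);
        // [clear, draw logo @4,10, present, clear, draw logo @0,10, present]
    }
}
```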

Figure 1 – GFX overview.

At the macroscopic level, the MPEG-J GFX application engine (execution environment and APIs) can be seen as an alternative to application-level formats such as BIFS and Laser. It uses the Java programming language to provide low-level access to terminal resources. This enables application developers to create their own audio-visual effects, as well as their own scene management and representation, optimized for the needs of their applications. GFX provides the following abstractions:

- DataSources are abstractions for media protocol handlers. They hide how the data is read from a source (file, stream, etc.).

- Players control the playback of time-based media data and provide means to synchronize with other players.

- Renderers perform rendering operations onto terminal displays and speakers.

- Controls enable control over media processing functions.
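
To illustrate the DataSource and Player roles, the sketch below pairs a hypothetical `MediaSource` with a simplified player whose states (UNREALIZED, REALIZED, PREFETCHED, STARTED, CLOSED) follow the Mobile Media API [6] lifecycle. Only these two roles are shown; the class names and the buffering strategy are assumptions, not the GFX API.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;

// Hypothetical DataSource role: hides where the bytes come from.
interface MediaSource {
    String contentType();
    InputStream open() throws IOException;
}

// Simplified Player whose states follow the Mobile Media API (JSR 135)
// lifecycle. This is a sketch, not javax.microedition.media.Player.
class SketchPlayer {
    enum State { UNREALIZED, REALIZED, PREFETCHED, STARTED, CLOSED }

    private final MediaSource source;
    private State state = State.UNREALIZED;
    private byte[] buffered;

    SketchPlayer(MediaSource source) { this.source = source; }

    State state() { return state; }

    int bufferedBytes() { return buffered == null ? 0 : buffered.length; }

    // Realize: learn what the media is (here, only its content type).
    void realize() {
        if (state == State.CLOSED) throw new IllegalStateException("player closed");
        if (state == State.UNREALIZED) {
            if (source.contentType() == null) throw new IllegalStateException("unknown content type");
            state = State.REALIZED;
        }
    }

    // Prefetch: realize if needed, then buffer data so start() is quick.
    void prefetch() throws IOException {
        realize();
        if (state != State.REALIZED) return;
        try (InputStream in = source.open()) {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] chunk = new byte[4096];
            int n;
            while ((n = in.read(chunk)) != -1) out.write(chunk, 0, n);
            buffered = out.toByteArray();
        }
        state = State.PREFETCHED;
    }

    // Start: implicitly realizes and prefetches first, as MMAPI players do.
    void start() throws IOException {
        prefetch();
        if (state == State.PREFETCHED) state = State.STARTED;
    }

    void close() {
        buffered = null;
        state = State.CLOSED;
    }

    public static void main(String[] args) throws IOException {
        SketchPlayer p = new SketchPlayer(new MediaSource() {
            public String contentType() { return "audio/mp4"; }
            public InputStream open() { return new ByteArrayInputStream(new byte[10_000]); }
        });
        p.start();
        System.out.println(p.state() + " " + p.bufferedBytes()); // STARTED 10000
        p.close();
    }
}
```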

GFX is network-agnostic, media-agnostic, and rendering-engine-agnostic: the terminal provides the network protocols, media encodings, and rendering engines that an application can use. For graphics, the GFX specification recommends the OpenGL ES [4] and M3G [5] rendering engines. For media and protocols, the supported types depend on the profiles supported by the terminal.
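
Because the terminal, not the API, determines which protocols and encodings are available, an application typically queries capabilities before choosing content. The sketch below models this with a small table keyed by protocol; it echoes the Mobile Media API's `Manager.getSupportedContentTypes(String)` query, but `TerminalCapabilities` and its methods are hypothetical, as are the protocol and content-type values.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Sketch of a terminal capability table: which content types the terminal
// can handle per protocol. The class itself is hypothetical.
class TerminalCapabilities {
    private final Map<String, Set<String>> typesByProtocol = new LinkedHashMap<>();

    // Terminal side: record support for a protocol/content-type pair.
    void register(String protocol, String contentType) {
        typesByProtocol.computeIfAbsent(protocol, p -> new LinkedHashSet<String>()).add(contentType);
    }

    Set<String> supportedContentTypes(String protocol) {
        Set<String> types = typesByProtocol.get(protocol);
        return types == null ? Collections.<String>emptySet() : Collections.unmodifiableSet(types);
    }

    // Application side: first of the author's preferred encodings that the
    // terminal supports over this protocol, or null if none is available.
    String pick(String protocol, List<String> preferred) {
        Set<String> available = supportedContentTypes(protocol);
        for (String type : preferred) {
            if (available.contains(type)) return type;
        }
        return null;
    }

    public static void main(String[] args) {
        TerminalCapabilities caps = new TerminalCapabilities();
        caps.register("http", "video/3gpp");
        caps.register("rtsp", "video/mp4");
        System.out.println(caps.pick("http", Arrays.asList("video/mp4", "video/3gpp"))); // video/3gpp
    }
}
```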

GFX is designed for devices with limited resources, so the Java layer is used mostly for application logic, while a hardware-optimized native layer performs heavy media processing. GFX builds on well-known, widely deployed industry practices and therefore reuses existing APIs whenever feasible [5][6].

Finally, it is important to note that GFX is fully extensible: protocols, codecs, and renderers can be added over time without recompiling or modifying the API or applications. The GFX design favors vendor-specific implementations of a standard API. As a result, conformance points concern the interfaces to the native layer, not the behavior of that layer. For example, vendors may support MPEG codecs and expose standard controls, and they may also provide (published) proprietary controls that let applications fine-tune system performance. Through controls, virtually any MPEG tool used by a specific piece of content can be exposed to an application.
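
The following sketch shows how an application might probe for an optional vendor control and fall back to a standard one, so that the same code runs on terminals with or without the proprietary extension. The `Control`, `QualityControl`, and `VendorDeblockingControl` interfaces and their methods are hypothetical; in the Mobile Media API the analogous lookup is `Controllable.getControl(String)`, which returns null for unsupported controls.

```java
import java.util.HashMap;
import java.util.Map;

// Marker type for controls (hypothetical, in the spirit of MMAPI's Control).
interface Control {}

// A standard control a conforming decoder might expose (hypothetical).
interface QualityControl extends Control {
    void setQuality(int level); // 0 = fastest, 10 = best
}

// A published vendor-specific control (hypothetical name and method).
interface VendorDeblockingControl extends Control {
    void setDeblockingStrength(int strength);
}

// A decoder that exposes controls; lookups return null for unsupported
// controls, so applications can probe for them at run time.
class ControllableDecoder {
    private final Map<Class<?>, Control> controls = new HashMap<>();

    <T extends Control> void expose(Class<T> type, T impl) { controls.put(type, impl); }

    <T extends Control> T getControl(Class<T> type) { return type.cast(controls.get(type)); }
}

// Application logic: prefer the vendor control, fall back to the standard
// one. The same application runs unchanged on either kind of terminal.
class Tuner {
    static String tune(ControllableDecoder decoder) {
        VendorDeblockingControl vendor = decoder.getControl(VendorDeblockingControl.class);
        if (vendor != null) {
            vendor.setDeblockingStrength(3);
            return "vendor";
        }
        QualityControl quality = decoder.getControl(QualityControl.class);
        if (quality != null) {
            quality.setQuality(5);
            return "standard";
        }
        return "none";
    }

    public static void main(String[] args) {
        ControllableDecoder basic = new ControllableDecoder();
        QualityControl qc = level -> System.out.println("quality=" + level);
        basic.expose(QualityControl.class, qc);
        System.out.println(Tuner.tune(basic)); // prints quality=5, then standard
    }
}
```

Keying controls by interface type rather than by string avoids casts in application code; the trade-off is that the application must be compiled against the vendor's published interface.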

Target applications

Entertainment, games, e-learning, training, simulation, e-commerce, etc.

References

[1] ISO/IEC 14496-11, Coding of audio-visual objects, Part 11: Scene description and Application engine (BIFS, XMT, MPEG-J)

[2] ISO/IEC 14496-20, Coding of audio-visual objects, Part 20: Lightweight Scene Representation (Laser)

[3] ISO/IEC 14496-21, Coding of audio-visual objects, Part 21: MPEG-J Graphics Framework extension (GFX)

[4] Khronos Group, OpenGL ES 1.1. http://www.khronos.org

[5] Java Community Process, Mobile 3D Graphics (M3G) 1.1, June 22, 2005. http://jcp.org/aboutJava/communityprocess/final/jsr184/index.html

[6] Java Community Process, Mobile Media API, June 2003. http://jcp.org/aboutJava/communityprocess/final/jsr135/index.html

[7] James Gosling, Bill Joy and Guy Steele, The Java Language Specification. Addison-Wesley, September 1996