ISO Base Media File Format (aka MPEG File Format)

MPEG doc#: N11966

Date: March 2011

Author: David Singer (Apple)

Introduction

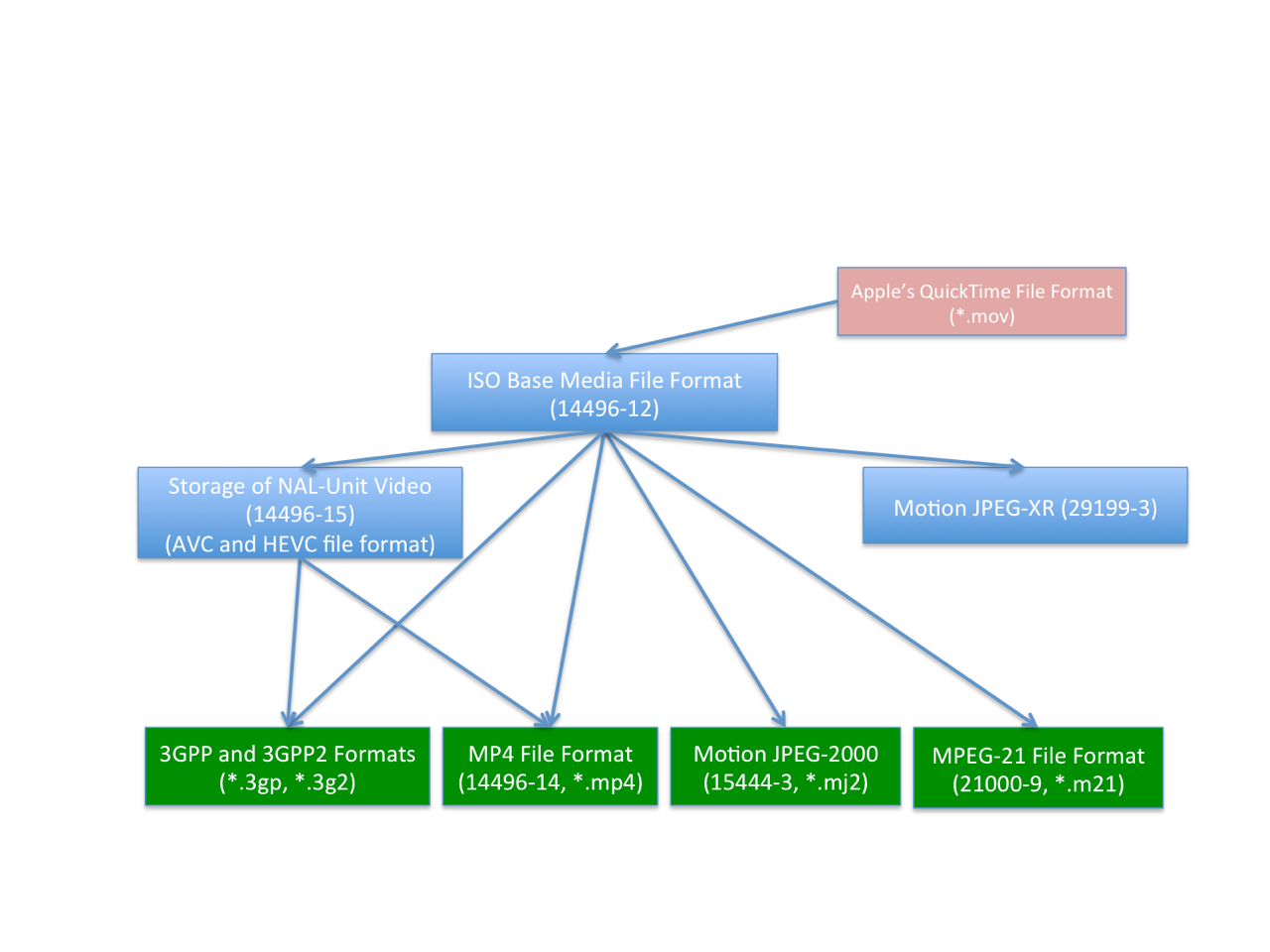

Within the ISO/IEC 14496 MPEG-4 standard there are several parts that define file formats for the storage of time-based media (such as audio and video). They are all based on, and derived from, the ISO Base Media File Format [1], a structural, media-independent definition that is also published as part of the JPEG 2000 family of standards.

There are also related file formats that use the structural definition of a box-structured file as defined in the ISO Base Media File Format, but do not use the definitions for time-based media. Other files using this structure include the standard file formats for JPEG 2000 images, such as JP2 [2].

A diagrammatic overview of how the various file formats relate to the ISO Base Media File Format is shown in Figure 1.

Target applications

The ISO Base Media File Format contains structural and media data information principally for timed presentations of media data such as audio and video. There is also support for un-timed data, such as metadata. By structuring files in different ways, the same base specification can be used for files for:

- capture;

- exchange and download, including incremental download and play;

- local playback;

- editing, composition, and lay-up;

- streaming from streaming servers, and capturing streams to files.

More specialized uses include the storage of a partial or complete MPEG-4 scene and its associated object descriptions. This general structure has been adopted not only for the MP4 file format but also by a number of other standards bodies, trade associations, and companies.

Decoupled Structures

The files have a logical structure, a time structure, and a physical structure, and these structures are not required to be coupled. Logically, a file contains a movie, which in turn contains a set of time-parallel tracks. In time, each track contains a sequence of samples, and those sequences are mapped into the timeline of the overall movie by optional edit lists.
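To make the time mapping concrete, here is a minimal Python sketch of how an edit list could map a time on the movie timeline to a time in a track's media timeline. It assumes a simplified edit entry carrying only a segment duration (in movie timescale units) and a media start time (in media timescale units, with -1 marking an empty edit), and it assumes normal playback rate. The `Edit` class and `movie_to_media_time` function are hypothetical names for illustration, not structures taken from the specification.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Edit:
    segment_duration: int  # length of this segment, in movie timescale units
    media_time: int        # media time where the segment starts; -1 marks an empty edit


def movie_to_media_time(t_movie: int, edits: List[Edit],
                        movie_timescale: int, media_timescale: int) -> Optional[int]:
    """Map a time on the movie timeline to a time in the track's media timeline.

    Returns None when t_movie falls in an empty edit or past the last edit.
    Assumes every edit plays at normal rate.
    """
    if not edits:
        # No edit list: the implicit direct mapping, converted between timescales.
        return t_movie * media_timescale // movie_timescale
    segment_start = 0
    for edit in edits:
        segment_end = segment_start + edit.segment_duration
        if segment_start <= t_movie < segment_end:
            if edit.media_time == -1:
                return None  # nothing from this track is presented here
            elapsed = t_movie - segment_start
            return edit.media_time + elapsed * media_timescale // movie_timescale
        segment_start = segment_end
    return None


# Movie timescale 1000 (ms), media timescale 90000: 0.5 s of nothing, then 2 s of
# media starting 0.1 s (9000 ticks) into the track.
edits = [Edit(500, -1), Edit(2000, 9000)]
print(movie_to_media_time(250, edits, 1000, 90000))   # None
print(movie_to_media_time(1500, edits, 1000, 90000))  # 99000
```

Because the track's samples never move, re-timing a presentation only requires rewriting the small edit list, which is one practical benefit of keeping the time structure decoupled from the physical one.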

Physical Structure

The family of storage file formats is based on the concept of box-structured files. A box-structured file consists of a series of boxes (sometimes called atoms), each of which has a size and a type. The types are 32-bit values, usually chosen to be four printable characters for ease of inspection and editing. Box-structured files are used in a number of applications, and it is possible to form ‘multi-purpose’ files that contain the boxes required by more than one specification. Examples include not only the ISO Base Media File Format family described here, but also the JPEG 2000 file format family, which is for the most part a still-image file format. Extension boxes may use a Universally Unique Identifier (UUID) [4] as their type, and the specification describes how to convert every box type into a UUID.
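The box header layout can be illustrated with a short Python sketch that walks a sequence of boxes. It assumes the header rules of [1] as we understand them: a 32-bit big-endian size followed by the four-character type; a size of 1 means a 64-bit size follows the type; a size of 0 means the box extends to the end of the file; and a type of ‘uuid’ is followed by a 16-byte extended type. The helper `box_type_as_uuid` assumes the conversion template XXXXXXXX-0011-0010-8000-00AA00389B71 (with the four-character code as XXXXXXXX); check the specification text before relying on it. All function and class names are hypothetical.

```python
import struct
import uuid
from typing import Iterator, NamedTuple, Optional


class BoxHeader(NamedTuple):
    box_type: str               # four-character code, e.g. "ftyp"
    offset: int                 # where the box starts in the buffer
    size: int                   # total size of the box, header included
    header_size: int            # bytes before the payload
    user_type: Optional[bytes]  # 16-byte extended type for "uuid" boxes


def iter_boxes(buf: bytes, start: int = 0,
               end: Optional[int] = None) -> Iterator[BoxHeader]:
    """Yield the header of each box found in buf[start:end], in order."""
    end = len(buf) if end is None else end
    pos = start
    while pos + 8 <= end:
        size, raw_type = struct.unpack_from(">I4s", buf, pos)
        header_size = 8
        if size == 1:
            # A 64-bit "largesize" follows the type field.
            (size,) = struct.unpack_from(">Q", buf, pos + 8)
            header_size = 16
        elif size == 0:
            # The box runs to the end of the file (only allowed for the last top-level box).
            size = end - pos
        box_type = raw_type.decode("latin-1")
        user_type = None
        if box_type == "uuid":
            user_type = bytes(buf[pos + header_size:pos + header_size + 16])
            header_size += 16
        if size < header_size or pos + size > end:
            raise ValueError(f"malformed box {box_type!r} at offset {pos}")
        yield BoxHeader(box_type, pos, size, header_size, user_type)
        pos += size


def box_type_as_uuid(four_cc: str) -> uuid.UUID:
    """Express a four-character box type as a UUID (assumed template, see lead-in)."""
    raw = four_cc.encode("latin-1")
    if len(raw) != 4:
        raise ValueError("box types are exactly four bytes")
    return uuid.UUID(bytes=raw + bytes.fromhex("00110010800000AA00389B71"))
```

Since every box announces its own size, a reader can skip any box whose type it does not recognize. This property is what makes the ‘multi-purpose’ files described above possible.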

All box-structured files start with a file-type box (possibly after a box-structured signature) that identifies the best use of the file and the specifications with which the file complies.
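A reader can check this rule before doing anything else. The Python sketch below locates the file-type box (‘ftyp’). It assumes that the only box-structured signature to skip is the 12-byte JPEG 2000 signature box, whose type is ‘jP’ followed by two spaces; other families may define their own signatures. The name `find_file_type_box` is hypothetical.

```python
import struct
from typing import Tuple

# Assumption: the only box-structured signature handled here is the JPEG 2000
# signature box (type 'jP  '); other file families may define their own.
SIGNATURE_TYPES = {b"jP  "}


def find_file_type_box(buf: bytes) -> Tuple[int, int]:
    """Return (offset, size) of the file-type box that opens a box-structured file."""
    pos = 0
    for _ in range(2):  # 'ftyp' is the first box, or the second after a signature box
        if pos + 8 > len(buf):
            break
        size, box_type = struct.unpack_from(">I4s", buf, pos)
        if box_type == b"ftyp":
            return pos, size
        if box_type not in SIGNATURE_TYPES or size < 8:
            break
        pos += size
    raise ValueError("file does not start with a file-type box")
```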

The physical structure of the file separates the data needed for logical, time, and structural decomposition from the media data samples themselves. This structural information is concentrated in a movie box, possibly extended in time by movie fragment boxes. The movie box documents the logical and timing relationships of the samples, and also contains pointers to where they are located. Those pointers may point into the same file or into another file, referenced by a URL.
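The separation between structure and media data can be seen by walking the nested boxes of the movie box down to a chunk offset table, whose entries point at media data stored elsewhere (here, in a media data box). This Python sketch assumes a small, incomplete set of container box types and handles only 32-bit box sizes and the 32-bit ‘stco’ table. The names `walk` and `chunk_offsets` are hypothetical.

```python
import struct
from typing import Iterator, List, Optional, Tuple

# Assumption: a small subset of the container box types, enough for this sketch.
CONTAINERS = {b"moov", b"trak", b"mdia", b"minf", b"stbl", b"edts", b"dinf",
              b"mvex", b"moof", b"traf"}


def walk(buf: bytes, start: int = 0, end: Optional[int] = None,
         path: Tuple[str, ...] = ()) -> Iterator[Tuple[str, int, int]]:
    """Yield (path, payload_start, box_end) for every box, descending into containers."""
    end = len(buf) if end is None else end
    pos = start
    while pos + 8 <= end:
        size, box_type = struct.unpack_from(">I4s", buf, pos)
        # Simplification: 64-bit (size == 1) and to-end (size == 0) boxes are rejected.
        if size < 8 or pos + size > end:
            raise ValueError(f"unsupported or malformed box at offset {pos}")
        here = path + (box_type.decode("latin-1"),)
        yield "/".join(here), pos + 8, pos + size
        if box_type in CONTAINERS:
            yield from walk(buf, pos + 8, pos + size, here)
        pos += size


def chunk_offsets(buf: bytes) -> List[int]:
    """Gather the file offsets of media chunks from every 32-bit 'stco' table."""
    offsets: List[int] = []
    for path, payload, _ in walk(buf):
        if path.endswith("/stco"):
            # Full box: 1-byte version + 3-byte flags, then a 32-bit entry count.
            (count,) = struct.unpack_from(">I", buf, payload + 4)
            offsets.extend(struct.unpack_from(f">{count}I", buf, payload + 8))
    return offsets
```

The offsets are absolute positions in the file, so the media data can be moved or interleaved differently without touching the logical or timing information, as long as the offsets are updated.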

Un-timed data may be contained in a metadata box, at the file level, or attached to the movie box or one of the streams of timed data, called tracks, within the movie.

Logical Structure

Each media stream is contained in a track specialized for that media type (audio, video, etc.), and is further parameterized by a sample entry. The sample entry contains the ‘name’ of the exact media type (i.e., the type of decoder needed to decode the stream) and any parameterization that decoder needs. The name also takes the form of a four-character code. Sample entry formats are defined not only for MPEG-4 media, but also for the media types used by other organizations using this file format family. They are registered at the MP4 registration authority [3].
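As an illustration, the following Python sketch lists the sample entries held in a track's sample description (‘stsd’) box. In the format, each sample entry is itself a box whose type is the four-character code naming the media format. The sketch assumes the layout as we understand it from [1]: ‘stsd’ is a full box (version and flags) followed by an entry count, and each entry begins with six reserved bytes and a 16-bit data reference index. The function name is hypothetical, and ‘avc1’ and ‘hvc1’ appear only as sample codes.

```python
import struct
from typing import List, Tuple


def sample_entry_formats(stsd_payload: bytes) -> List[Tuple[str, int]]:
    """Return (format, data_reference_index) for each sample entry.

    stsd_payload is the content of an 'stsd' box after its 8-byte box header.
    """
    # Full box: 1-byte version + 3-byte flags, then a 32-bit entry count.
    (count,) = struct.unpack_from(">I", stsd_payload, 4)
    pos, entries = 8, []
    for _ in range(count):
        size, fmt = struct.unpack_from(">I4s", stsd_payload, pos)
        if size < 16:
            raise ValueError("sample entry too short")
        # Every sample entry starts with 6 reserved bytes and a data_reference_index.
        (dref_index,) = struct.unpack_from(">H", stsd_payload, pos + 14)
        entries.append((fmt.decode("latin-1"), dref_index))
        pos += size
    return entries
```

A player would compare each returned format code against the decoders it has available. Any codec-specific parameters follow these common fields inside the entry and are not parsed here.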

Tracks (or sub-tracks) may be identified as alternatives to each other. Track selection data can declare which aspects of the tracks distinguish the alternatives, and so can be used to determine which alternative to present.
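The selection logic can be sketched in Python. The sketch assumes that alternatives are marked by a shared, non-zero alternate group number in each track header, with 0 meaning the track is not an alternative to any other track. It uses language as the distinguishing attribute. The `Track` class, its `language` field, and `choose_tracks` are hypothetical simplifications of the track header and track selection data.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Track:
    track_id: int
    alternate_group: int  # 0: not an alternative to any other track
    language: str         # hypothetical selection attribute for this sketch


def choose_tracks(tracks: List[Track], preferred_language: str) -> List[Track]:
    """Present every ungrouped track, plus one track from each alternate group."""
    chosen: List[Track] = []
    groups: Dict[int, List[Track]] = defaultdict(list)
    for track in tracks:
        if track.alternate_group == 0:
            chosen.append(track)
        else:
            groups[track.alternate_group].append(track)
    for members in groups.values():
        # Prefer the requested language; otherwise fall back to the first member.
        matches = [t for t in members if t.language == preferred_language]
        chosen.append((matches or members)[0])
    return sorted(chosen, key=lambda t: t.track_id)
```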

Time Structure

Each track is a sequence of timed samples; each sample has a decoding time and may also have a composition (display) time offset. Edit lists may be used to override the implicit direct mapping of the media timeline into the timeline of the overall movie.
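The relationship between decoding and composition times can be worked through in a small Python sketch. It assumes that decoding times are run-length coded as (sample count, sample delta) pairs, and that composition offsets are coded as (sample count, offset) pairs added to each decoding time. This follows our reading of the time-to-sample and composition offset tables in [1]. The function names are hypothetical.

```python
from typing import List, Tuple


def decoding_times(stts: List[Tuple[int, int]]) -> List[int]:
    """Expand (sample_count, sample_delta) runs into per-sample decoding times."""
    times, t = [], 0
    for count, delta in stts:
        for _ in range(count):
            times.append(t)
            t += delta
    return times


def composition_times(dts: List[int], ctts: List[Tuple[int, int]]) -> List[int]:
    """Add per-sample composition offsets, given as (sample_count, offset) runs."""
    offsets = [offset for count, offset in ctts for _ in range(count)]
    if len(offsets) != len(dts):
        raise ValueError("composition offset table does not cover every sample")
    return [d + o for d, o in zip(dts, offsets)]


# Four frames stored in decoding order I, P, B, B with a delta of 1000 ticks.
dts = decoding_times([(4, 1000)])
cts = composition_times(dts, [(1, 1000), (1, 3000), (2, 0)])
print(dts)  # [0, 1000, 2000, 3000]
print(cts)  # [1000, 4000, 2000, 3000]
```

The frames are decoded in stored order, but sorting by composition time gives the display order I, B, B, P. This is why the offset is stored separately from the decoding time.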

Sometimes the samples within a track have different characteristics or need to be specially identified. One of the most common and important characteristics is the synchronization point (often a video I-frame). These points are identified by a special table in each track. More generally, the nature of the dependencies between track samples can also be documented. Finally, there is a concept of named, parameterized sample groups. Each sample in a track may be associated with at most one group description of a given group type, and there may be many group types.
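A Python sketch of both lookups follows. It makes three assumptions. First, the sync sample table is a sorted list of 1-based sample numbers. Second, when that table is absent, every sample is a sync sample. Third, sample-to-group assignments are run-length coded as (sample count, group description index) pairs, with index 0 meaning "no group of this type". All function names are hypothetical.

```python
import bisect
from typing import List, Optional, Tuple


def is_sync_sample(sample_number: int, stss: Optional[List[int]]) -> bool:
    """stss holds 1-based sample numbers in increasing order; None means no table,
    in which case every sample is a sync sample."""
    if stss is None:
        return True
    i = bisect.bisect_left(stss, sample_number)
    return i < len(stss) and stss[i] == sample_number


def sync_sample_at_or_before(sample_number: int, stss: Optional[List[int]]) -> int:
    """Return the nearest sync sample at or before sample_number (a random-access point)."""
    if stss is None:
        return sample_number
    i = bisect.bisect_right(stss, sample_number)
    if i == 0:
        raise ValueError("no sync sample precedes this sample")
    return stss[i - 1]


def group_description_index(sample_number: int,
                            sbgp: List[Tuple[int, int]]) -> int:
    """Look up a sample in (sample_count, group_description_index) runs.
    Index 0 means the sample belongs to no group of this grouping type."""
    first = 1
    for count, index in sbgp:
        if sample_number < first + count:
            return index
        first += count
    return 0  # samples past the end of the table are in no group
```

To seek to sample 45 when sync samples occur at 1, 31, and 61, a player would start decoding at sample 31 and decode forward.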

Metadata Support

Support for meta-data takes two forms. First, timed meta-data may be stored in an appropriate track, synchronized as desired with the media data it is describing.

Second, there is general support for non-timed collections of metadata items attached to the movie or to an individual track. The actual data of these items may be in the metadata box, elsewhere in the same file, or in another file, or may be constructed from other items. In addition, these resources may be named, stored in extents, and protected. These metadata containers are used to support file-delivery streaming, storing both the ‘files’ that are to be streamed and support information such as reservoirs of pre-calculated forward error correction (FEC) codes.
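Storing an item "in extents" means that its bytes may be split across several ranges of the file. The Python sketch below reassembles an item from (offset, length) extents measured from a base offset. This is a simplification of the item location information. Special cases such as construction from other items are not handled. The name `read_item` is hypothetical.

```python
from typing import List, Tuple


def read_item(data: bytes, base_offset: int,
              extents: List[Tuple[int, int]]) -> bytes:
    """Concatenate an item's (offset, length) extents, relative to base_offset."""
    parts = []
    for offset, length in extents:
        start = base_offset + offset
        if start < 0 or start + length > len(data):
            raise ValueError("extent lies outside the available data")
        parts.append(data[start:start + length])
    return b"".join(parts)


file_bytes = b"HEADER" + b"Hel" + b"xx" + b"lo"
print(read_item(file_bytes, 6, [(0, 3), (5, 2)]))  # b'Hello'
```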

The generalized metadata structures may also be used at the file level, above, parallel with, or in the absence of the movie box. In this case, the metadata box is the primary entry into the presentation. Other bodies use this structure to package an integration specification (e.g. SMIL [5]) together with the media it integrates.

Protection Support

Protected streams (such as encrypted streams, or streams controlled by a digital rights management (DRM) system) are also supported by the file format. There is a general structure for protected streams, which documents the underlying format as well as the protection system applied and any parameters it needs.
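Here is how a reader might recover that information, sketched in Python. The sketch assumes the structure as we understand it from [1]. A protected sample entry is renamed (for example, to ‘encv’ for video) and carries a protection scheme information box (‘sinf’). That box holds an original format box (‘frma’) and a scheme type box (‘schm’) that gives the scheme type and version. The ‘cenc’ code and version value in the usage are samples only. The function name is hypothetical.

```python
import struct
from typing import Optional, Tuple


def protection_info(sinf_payload: bytes) -> Tuple[Optional[str], Optional[str], Optional[int]]:
    """Return (original_format, scheme_type, scheme_version) from a 'sinf' payload."""
    original = scheme_type = scheme_version = None
    pos = 0
    while pos + 8 <= len(sinf_payload):
        size, box_type = struct.unpack_from(">I4s", sinf_payload, pos)
        if size < 8:
            raise ValueError("malformed box inside 'sinf'")
        body = pos + 8
        if box_type == b"frma":
            # Original format box: the sample entry type before protection was applied.
            original = sinf_payload[body:body + 4].decode("latin-1")
        elif box_type == b"schm":
            # Full box: 1-byte version + 3-byte flags, then scheme type and version.
            raw_type, scheme_version = struct.unpack_from(">4sI", sinf_payload, body + 4)
            scheme_type = raw_type.decode("latin-1")
        pos += size  # other boxes (e.g. 'schi') are skipped in this sketch
    return original, scheme_type, scheme_version
```

A reader that does not support the scheme still learns the underlying format. A reader that does support it knows which decoder to use once the samples are unprotected.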

This same protection support can be applied to the items in the metadata.

Streaming Support

Hint tracks support both streams prepared for transmission by streaming servers (server hint tracks) and streams recorded into files (reception hint tracks).

When media is delivered over a streaming protocol, it often must be transformed from the way it is represented in the file. The most obvious example is the way media is transmitted over the Real-time Transport Protocol (RTP) [6]. In the file, for example, each frame of video is stored contiguously as a file-format sample. In RTP, packetization rules specific to the codec used must be obeyed to place these frames in RTP packets.

A streaming server may calculate such packetization at run-time if it wishes. Alternatively, hint tracks contain general instructions telling streaming servers how to form packet streams from media tracks for a specific protocol. Because the form of these instructions is media-independent, servers do not have to be revised when new codecs are introduced. In addition, the encoding and editing software can be unaware of streaming servers. Once editing of a file is finished, a piece of software called a hinter may be used to add hint tracks to the file before it is placed on a streaming server.
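The division of labour between hinter and server can be sketched in Python. The packet "constructors" below are a hypothetical, heavily simplified stand-in for real hint samples. One kind copies literal bytes, and the other copies a byte range of the media sample. The fixed one-byte header and equal-size splitting in `hint_sample` are placeholders for real codec packetization rules. The point is that `build_packets` needs no knowledge of the codec.

```python
from typing import List, Tuple, Union

# Hypothetical, simplified packet constructors (not the on-disk hint sample syntax):
#   ("immediate", data)         copy literal bytes, e.g. a payload header
#   ("sample", offset, length)  copy a byte range from the media sample
Constructor = Union[Tuple[str, bytes], Tuple[str, int, int]]


def hint_sample(sample_len: int, max_payload: int,
                header: bytes = b"\x00") -> List[List[Constructor]]:
    """Hinter side: plan packets for one sample. A real hinter would apply the
    codec's packetization rules; here the sample is just split into equal pieces."""
    room = max_payload - len(header)
    if room <= 0:
        raise ValueError("max_payload leaves no room for sample data")
    return [[("immediate", header), ("sample", off, min(room, sample_len - off))]
            for off in range(0, sample_len, room)]


def build_packets(plan: List[List[Constructor]], sample: bytes) -> List[bytes]:
    """Server side: follow the instructions with no knowledge of the codec."""
    packets = []
    for constructors in plan:
        payload = bytearray()
        for c in constructors:
            if c[0] == "immediate":
                payload += c[1]
            elif c[0] == "sample":
                _, off, length = c
                payload += sample[off:off + length]
            else:
                raise ValueError(f"unknown constructor {c[0]!r}")
        packets.append(bytes(payload))
    return packets


frame = bytes(range(10))
print(build_packets(hint_sample(len(frame), 5), frame))
```

Because the plan only references byte ranges of existing samples, a hinted file does not duplicate the media data.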

Formats are defined for server and reception hint tracks for RTP and MPEG-2 Transport, and there is a server hint track format for file delivery (FD) that can be used to support protocols such as FLUTE [].

File Identification

The file-type box identifies the specifications with which the file complies by using brands. The presence of a brand in this box indicates both a claim and a permission: a claim by the file writer that the file complies with the specification, and a permission for a reader, possibly implementing only that specification, to read and interpret the file. The major brand of a file indicates its best usage, and normally matches its file extension and MIME type.
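The claim-and-permission rule translates directly into a reader-side check. This Python sketch assumes the file-type box layout of [1]: a major brand (four characters), a 32-bit minor version, and then a list of compatible brands running to the end of the box. It treats every brand listed in the box, major or compatible, as a permission. `FileType`, `parse_ftyp`, and `reader_may_interpret` are hypothetical names, and the brand codes in the usage are samples only.

```python
import struct
from dataclasses import dataclass
from typing import List, Set


@dataclass
class FileType:
    major_brand: str
    minor_version: int
    compatible_brands: List[str]


def parse_ftyp(box: bytes) -> FileType:
    """Parse a complete 'ftyp' box: major brand, minor version, compatible brands."""
    size, box_type = struct.unpack_from(">I4s", box, 0)
    if box_type != b"ftyp" or size < 16 or size > len(box):
        raise ValueError("not a well-formed file-type box")
    major, minor = struct.unpack_from(">4sI", box, 8)
    brands = [box[i:i + 4].decode("latin-1") for i in range(16, size - 3, 4)]
    return FileType(major.decode("latin-1"), minor, brands)


def reader_may_interpret(ftyp: FileType, supported: Set[str]) -> bool:
    """A listed brand is the writer's claim of compliance and the reader's permission:
    a reader that implements any listed specification may read the file."""
    listed = {ftyp.major_brand, *ftyp.compatible_brands}
    return bool(listed & supported)


ft = parse_ftyp(struct.pack(">I4s4sI4s4s", 24, b"ftyp", b"mp42", 0, b"mp42", b"isom"))
print(ft.major_brand, ft.compatible_brands)  # mp42 ['mp42', 'isom']
print(reader_may_interpret(ft, {"isom"}))    # True
```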

The ISO Base Media File Format specification defines a number of brands covering different required feature sets. These brands may not be used as major brands, because this specification is always specialized in use.

The formal registration authorities (e.g. the MP4 registration authority [3] for brands, or the Internet Assigned Numbers Authority [7] for MIME types) and the appropriate specifications should be consulted for definitive information.

Registration Authority

A registration authority registers and documents the four-character codes used in this file-format family, as well as some other code-points related to MPEG-4 Systems. The database is publicly viewable and registration is free [3].

References

[1] ISO/IEC 14496-12, ISO Base Media File Format; technically identical to ISO/IEC 15444-12

[2] ISO/IEC 15444-1, JPEG 2000 Image Coding System

[3] The MP4 Registration Authority, http://www.mp4ra.org/

[4] ISO/IEC 9834-8:2004, Information technology, "Procedures for the operation of OSI Registration Authorities: Generation and registration of Universally Unique Identifiers (UUIDs) and their use as ASN.1 Object Identifier components"; ITU-T Rec. X.667, 2004

[5] SMIL: Synchronized Multimedia Integration Language; World-Wide Web Consortium (W3C) http://www.w3.org/TR/SMIL2/

[6] RTP: A Transport Protocol for Real-Time Applications; IETF RFC 3550, http://www.ietf.org/rfc/rfc3550.txt

[7] The Internet Assigned Numbers Authority http://www.iana.org/
