By Philip Merril - January 2018

The pursuit of reconfigurability in MPEG specifications allows a bottom-up rethinking of how digital standards can be represented. The reconfigurable work done for the MPEG-4 Simple and AVC Constrained Baseline Video Profiles has been extended to HEVC and 3D graphics, and could someday encompass audio and systems profiles as well. Modules called Functional Units are specified, in the standard RVC-CAL language, in terms of the computation they perform. They are connected to form a network, which lets drag-and-drop visual programs support new configurations, their simulation, and their implementation in either software or hardware using direct high-level synthesis tools. Unlike the long, linear, sequential specifications more common to MPEG, these Functional Units can be specified in a way that remains much closer to their underlying algorithms. Given initial instructions on how to parse the bitstream of content data, written in Bitstream Syntax Description language, dataflow networks configured from these predesigned pieces could potentially process, decode and render any kind of digital media. So far, though, the work has started with video and 3D graphics.

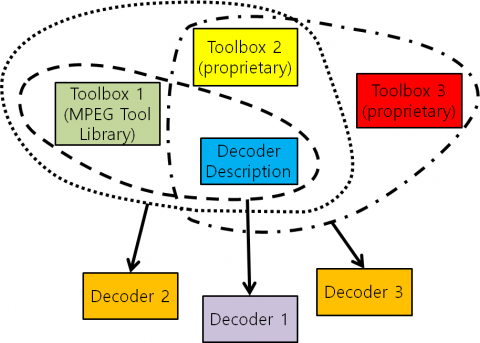

As MPEG is well aware, there are many different ways that digital video can be coded, decoded and optimized for encoding. So many, in fact, that Reconfigurable Video Coding (RVC) set up a framework in which a single Abstract Decoder Model can be instantiated to decode, theoretically, any piece of digital media. The idea is that the structure of the bits and the processing required (which steps, in which order) can all be laid out so that the Decoder Description tells a person or machine how to handle specific video or other media. The RVC framework is primarily laid out in MPEG-B Codec Configuration Representation. It draws from a Video Tool Library (VTL) in MPEG-C that supports the MPEG-4 Simple and AVC Constrained Baseline Profiles. The VTL is built from modular Functional Units that can be reconfigured to execute the appropriate algorithms, in order, for each Profile.

In principle, the RVC model can be used for any media codec. For instance, RVC has been used for the 3D graphics codecs standardized by MPEG in Part 16 of MPEG-4. A Graphics Tool Library (GTL) was developed to cover the signal processing specific to 3D data, using the same Functional Unit approach as for video.

The software approach of providing processing instructions before providing a bitstream's content data can be applied to any digital media, and because it is replicable it could have far-reaching implications. It is certainly practical given the regular invention of new and proprietary video codecs, because it can ultimately keep old digital media from becoming unusable (keeping it playable as is, rather than requiring transcoding or conversion). It also operationalizes what MPEG standardization has already accomplished, providing another view in which tools are put into action instead of being spelled out in all the required detail, which is MPEG's common approach.

Codec Configuration Representation invites visualization of the many different codecs that are possible. For some, seeing the tools in action helps reinforce learning how they work, so this approach seems beneficial to advanced digital media coding generally, as a field of study. The approach was first conceptualized in response to a request to standardize a national codec, and can be applied both broadly and very specifically. Reconfigurable Media Coding (RMC) provides a framework for Abstract Decoder Model instantiation that could decode anything set up to fit within the framework.

The idea in some ways is that you could draw the path on a cocktail napkin, and the collection of Functional Units in the VTL can then be configured accordingly. The RVC-CAL and network modeling languages have visual programming support and implementations are available through open source development platforms. At the end of the configuration, a visual flowchart has built-in behavioral paths for the data so that dependencies are either spelled out as token behavior or else are encapsulated within a Functional Unit. The FUs all have input and output connections with each other, and this enables the data entities to be moved around as tokens.
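The token-passing behavior described above can be sketched in a few lines of Python. This is only an illustration of the dataflow idea, not RVC-CAL and not any tool from the VTL: the `FunctionalUnit` class, the `run` scheduler, and the three toy operations are all hypothetical names chosen for the example, and each unit is assumed to have a single input port and a single output port.

```python
from collections import deque


class FunctionalUnit:
    """Hypothetical stand-in for a Functional Unit with one input and one output port."""

    def __init__(self, name, action):
        self.name = name
        self.action = action   # function applied to each incoming token
        self.inbox = deque()   # FIFO of tokens waiting on the input port
        self.targets = []      # downstream units wired to the output port

    def connect(self, other):
        """Wire this unit's output to another unit's input; returns the other unit."""
        self.targets.append(other)
        return other

    def can_fire(self):
        return bool(self.inbox)

    def fire(self):
        """Consume one token, process it, and pass the result downstream."""
        token = self.inbox.popleft()
        result = self.action(token)
        for target in self.targets:
            target.inbox.append(result)
        return result


def run(units):
    """Fire any unit that has a pending token until the whole network is idle."""
    progressed = True
    while progressed:
        progressed = False
        for unit in units:
            if unit.can_fire():
                unit.fire()
                progressed = True


# Toy three-unit chain: scale -> offset -> sink (operations are illustrative only).
collected = []
scale = FunctionalUnit("scale", lambda t: t * 2)
offset = FunctionalUnit("offset", lambda t: t + 1)
sink = FunctionalUnit("sink", lambda t: collected.append(t) or t)
scale.connect(offset).connect(sink)

for tok in [1, 2, 3]:
    scale.inbox.append(tok)
run([scale, offset, sink])
print(collected)  # [3, 5, 7]
```

No unit calls another directly: each one only reacts to tokens arriving on its input, so the order of processing is determined by the connections rather than by a fixed program sequence.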

Within the Abstract Decoder Model, a complete Decoder Description must reference the complete network of I/O connections, which constitute the behavioral model of the configured decoder. This level of flow management simplifies the potentially competitive creation of implementations: vendors could build their own notion of the ideal design into software, as long as it handles the media data as specified through the connections between Functional Units.
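As a rough sketch of what it means for a description to reference the network of connections, the Python below builds two different decoders from the same small library simply by changing the list of connections. Everything here is an assumption made for illustration: the `TOOL_LIBRARY` entries, the dictionary layout of the descriptions, and the `instantiate` helper are hypothetical and do not reflect the actual MPEG-B format, and the network is restricted to a simple linear chain.

```python
# Hypothetical tool library: names and operations are illustrative only.
TOOL_LIBRARY = {
    "scale": lambda x: x * 2,
    "offset": lambda x: x + 1,
    "clip": lambda x: max(0, min(255, x)),
}

# Hypothetical description: which units to instantiate and how they are connected.
DESCRIPTION_A = {
    "instances": {"s": "scale", "o": "offset", "c": "clip"},
    "connections": [("input", "s"), ("s", "o"), ("o", "c"), ("c", "output")],
}

# A second configuration that reuses the same library but omits the scaling unit.
DESCRIPTION_B = {
    "instances": {"o": "offset", "c": "clip"},
    "connections": [("input", "o"), ("o", "c"), ("c", "output")],
}


def instantiate(description, library):
    """Build a decoder function by following connections from 'input' to 'output'.

    Assumes a linear chain: every node has exactly one outgoing connection.
    """
    next_of = dict(description["connections"])
    chain = []
    node = next_of["input"]
    while node != "output":
        tool_name = description["instances"][node]
        chain.append(library[tool_name])
        node = next_of[node]

    def decode(token):
        for operation in chain:
            token = operation(token)
        return token

    return decode


decoder_a = instantiate(DESCRIPTION_A, TOOL_LIBRARY)
decoder_b = instantiate(DESCRIPTION_B, TOOL_LIBRARY)
results_a = [decoder_a(t) for t in [10, 200]]
results_b = [decoder_b(t) for t in [10, 200]]
print(results_a)  # [21, 255]
print(results_b)  # [11, 201]
```

The point of the sketch is that the description is data rather than code: an implementer is free to realize `instantiate` however they like, provided the tokens follow the connections as described.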

The Bitstream Syntax Description, followed by the VTL's FUs, specifies the initial parsing to expect and what to do thereafter. The decoder can be simulated or implemented, either in software or hardware. From beginning to end we have a configured network where processes are controlled by the structure of the data and the steps, keeping the dataflow both visible and rational. There is no strict timetable for extending this method to MPEG audio or systems, but the reconfigurability approach has a refreshing next-generation look and feel that can apply well to the proliferating platforms in the wild and the expanding range of media types delivered to them.
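To make the idea of a syntax description driving the parser more concrete, here is a minimal Python sketch in which a generic routine reads fields from a byte string according to a table of names and bit widths. The table format, the field names, and the `parse_header` function are assumptions invented for this example; the real Bitstream Syntax Description language is considerably richer and is not reproduced here.

```python
# Hypothetical syntax description: (field name, width in bits), in bitstream order.
SYNTAX = [("profile", 4), ("width", 8), ("height", 8), ("flags", 4)]


def parse_header(data, syntax):
    """Read fixed-width, big-endian bit fields from `data` as directed by `syntax`."""
    bits = int.from_bytes(data, "big")
    total_bits = len(data) * 8
    position = 0
    fields = {}
    for name, width in syntax:
        if position + width > total_bits:
            raise ValueError(f"bitstream too short for field {name!r}")
        shift = total_bits - position - width
        fields[name] = (bits >> shift) & ((1 << width) - 1)
        position += width
    return fields


# Three bytes: 0001 1010 0000 1011 0100 0101
header = parse_header(bytes([0x1A, 0x0B, 0x45]), SYNTAX)
print(header)  # {'profile': 1, 'width': 160, 'height': 180, 'flags': 5}
```

Changing the table changes what the parser extracts without touching the parsing routine, which is the property the description-first approach relies on: the parsed fields can then be handed to the configured network as its first tokens.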
