by Philip Merrill - June 2017

The Multimedia Linking Application Format (MLAF) has standardized the "bridget," a link between bridge points in digital media resources, which can include time-period segments. Bridgets can be shared interoperably between platforms and applications. Unlike Web links, which date from the desktop era, these media links are designed to support photos, video, social media, and search, as well as platform options such as second screen or mixed reality.

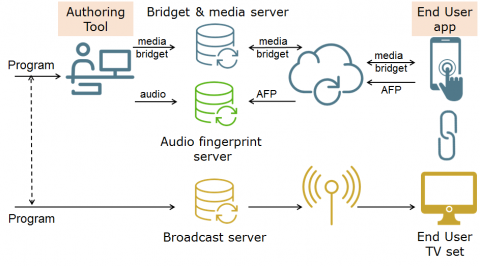

In 2013, European Commission grants supported several digital media projects, including enhanced broadcast and second screen efforts that are now culminating in the bridget's near-complete MPEG standardization as MLAF. Improving underlying formats for second screen viewing is a long-term goal for broadcasters because their audience is already paying attention while doing other things, such as watching TV while engaging in social media on a smartphone or tablet. Delivering personalized interactive content related to the video shown on TV reaches these viewers, who are already there, following the show while multitasking.

This early work appears online at ict-bridget.eu and represents the collaboration of Fraunhofer, Huawei, Rai – Radiotelevisione italiana, and Telecom Italia; academic groups at the Technical University of Madrid, the Institut Mines-Télécom, and the University of Surrey; and CEDEO and Visual Atoms. The scenarios considered include pre-made broadcast enhancements, contemporaneously authored travel journaling, and search on a real-time breaking news story.

By the time the program ended in late 2016, its results included an agreed format passed off to MPEG, casual and pro-oriented authoring tools, a player, testing with CDVS image archive search and mixed reality presentation, and proof-of-concept demos put online by CEDEO, as well as the WimBridge and TVBridge authoring tools. These, for example, present clickable areas with image or video links: clicking an image link pauses the video and overlays a larger image, and clicking outside the enlarged image resumes the video, whereas clicking a video link jumps the content to the time period indicated in the link's metadata.
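The click behavior described for the demos can be sketched as a small state model. This is only an illustration of the interaction as described here, not code from the BRIDGET project or the MLAF specification; the class `DemoPlayer`, its fields, and the example URI are all hypothetical names chosen for this sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DemoPlayer:
    """Hypothetical model of the demo player's state (not a real API)."""

    position_s: float = 0.0            # current playback position, in seconds
    playing: bool = True               # whether the main video is playing
    overlay_uri: Optional[str] = None  # enlarged image currently shown, if any

    def click_image_link(self, image_uri: str) -> None:
        # An image link pauses the video and overlays a larger image.
        self.playing = False
        self.overlay_uri = image_uri

    def click_outside_overlay(self) -> None:
        # Clicking outside the enlarged image dismisses it and resumes playback.
        if self.overlay_uri is not None:
            self.overlay_uri = None
            self.playing = True

    def click_video_link(self, start_s: float) -> None:
        # A video link jumps to the time period named in the link's metadata.
        if start_s < 0:
            raise ValueError("start time must be non-negative")
        self.position_s = start_s


if __name__ == "__main__":
    player = DemoPlayer()
    player.click_image_link("https://example.com/enlarged.jpg")  # hypothetical URI
    player.click_outside_overlay()
    player.click_video_link(42.5)
    print(player)
```

The sketch keeps the two link types as separate methods because, as described above, they affect different parts of the player state: image links toggle pause and overlay, while video links only move the playback position.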

On the MPEG side, an independent requirements cycle was conducted, and MLAF, like other MPEG-A parts, took advantage of structures from MPEG-7 and MPEG-21, notably the MPEG-7 Audiovisual Description Profile with its temporal decomposition syntax and the MPEG-21 Digital Item Declaration, a metadata container supporting pointers and hooks to identify resources, their locations, suggestions for use, and any other critical or supplemental information stored as metadata. MLAF bridgets are also designed for compatibility with the EBU Core Metadata Set, which builds on the Dublin Core, as well as with the MPEG-A Augmented Reality Application Format (ARAF), which supports mixed reality display of bridgets in scenes and helps to enable CDVS image archive search.

To start with a very simple example of the "Why not just … ?" variety, one might ask why not just use something like an HTML link to a resource's URL and state its MIME type for assistance. What stands out from this simple example is that three main areas of standardization have gone into it: HTML's simple anchor format, the URI system as a whole, and the MIME resource type list. So MLAF link containers can be considered a further step in a more extensive process of making connections between content resources in our digital world. The web did not develop to be friendly to video in general. Hyperlinks were first based on making textual references clickable, connecting article citations to articles (as bridge points), followed by the later addition of imagery displayed alongside text. Media content such as broadcasts, and the moving image generally, presents dense and passing information that cannot be statically highlighted as easily as text or rectangles holding images that don't move. Unlike our now-conventional hyperlinks, MLAF has the granular potential to link either to or from any individual video frame or audio sample.
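To make the contrast concrete, here is a minimal sketch of a link whose two ends can each carry an optional time segment in addition to a URI and MIME type. It is not the MLAF syntax, which builds on MPEG-7 and MPEG-21 structures; the names `TimeSegment`, `BridgePoint`, `Bridget`, and `frame_segment`, the constant frame rate, and the example URIs are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TimeSegment:
    """A time period within a media resource, in seconds (hypothetical type)."""

    start_s: float
    end_s: float

    def __post_init__(self) -> None:
        if self.start_s < 0 or self.end_s < self.start_s:
            raise ValueError("segment must satisfy 0 <= start_s <= end_s")


@dataclass(frozen=True)
class BridgePoint:
    """One end of a link: what a plain hyperlink has, plus an optional segment."""

    uri: str
    mime_type: str
    segment: Optional[TimeSegment] = None  # None means the whole resource


@dataclass(frozen=True)
class Bridget:
    """A link from one bridge point to another (illustrative only)."""

    source: BridgePoint
    destination: BridgePoint


def frame_segment(frame_index: int, fps: float) -> TimeSegment:
    """Time span covered by a single frame, assuming a constant frame rate."""
    if frame_index < 0 or fps <= 0:
        raise ValueError("frame_index must be >= 0 and fps must be > 0")
    return TimeSegment(frame_index / fps, (frame_index + 1) / fps)


if __name__ == "__main__":
    # Link from a single frame of a broadcast to a still image (hypothetical URIs).
    link = Bridget(
        source=BridgePoint("https://example.com/show.mp4", "video/mp4",
                           frame_segment(250, 25.0)),
        destination=BridgePoint("https://example.com/photo.jpg", "image/jpeg"),
    )
    print(link)
```

A plain HTML anchor corresponds roughly to a `BridgePoint` with no segment at the destination and no structured source at all; giving both ends the same shape is what lets a link run either to or from a single frame.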

This world is remarkably multi-platform, with social collaboration and sharing. As its range continues to expand with mobile VR/AR and new social apps, people will increasingly want to have one link to a media item at their fingertips: accessible, authorable, interoperable. As mentioned above, the URI system is already convenient because it was designed to be so, and so the idea is to be able to link to a resource in just such a way that any platform or application can interoperably parse metadata relevant to that resource's use.

Following the professional production workflow for bridget creation, a broadcaster can offer media link enhancements, empowering audience members to set some preferences such as whether to watch bridgets on a second screen or on the same screen. Because of CDVS image matching and CDVA in the pipeline for video, one can imagine a multitude of location, actor, and even costume or scene element/prop matches. A professional author will use taste to curate a select set of links to enhance the main program.

Social media workflow presents a completely casual extreme of routine postings while one is on the go. A tourism workflow could distribute real-time bridgets to friends, receive replies, create a running archive, and integrate results of image searches as well as annotations from mixed reality location databases. Because the interoperable MLAF structure is made to maximize cross-platform potential, whether smartphone plus tablet, desktop only, or mixed reality, our tourist who might also be an on-the-go shopper should be able to research garments and designers while friends provide advice or suggestions. Everyone has their own devices, but thousands of possibilities can be presented using the same bridget structure.

Automatically generated bridgets can be set up with any number of algorithmically enabled workflows. Of course, machines are capable of producing more than the amount needed, so the user's ability to receive and perceive bridgets involves wider considerations than how to build the bridgets themselves. As mentioned above, the Augmented Reality Application Format (ARAF) more fully exploits the extensive Scene work performed years ago in MPEG-4 as a framework that MPEG can now enhance with more recent features, such as bridgets positioned in 3D space or displayed with optional style configurations. Receiving bridget information within an ARAF mixed-reality scene supports a new variety of use cases. A city tour can now play docent while a pedestrian strolls downtown, and relevant links between media can be automatically generated. Investment traders already monitor subscription alert services that deliver near real-time financial data on instrument types such as stocks, bonds, or relative currency values. Whether such information appears in bridgets or not is incidental to the trader's decisions (and to ARAF's internal management of hyperlinks as well), but keeping stocks and bonds segregated in the visual environment could be valuable, e.g., upper-left versus upper-right. Geospatial engineers map many licensed feeds of visual and quantitative data within 2D digital maps, and ARAF will support expanding GIS visibility to 3D, with or without bridgets. So automated bridget workflows are a promising area for innovative development, and many kinds of bridget intermediary services might evolve.

While workflows make the most of MLAF's power and flexibility, speculation and efforts are under way to more fully develop a multimedia hyperlinking environment. The invitation to imagine what this should look like invokes nearly a dozen other MPEG research areas, including MPEG-I. As reviewed above, the Scene work for VR/AR mixed reality composites virtual objects, such as a single link displayed in 3D, so MLAF bridgets should be able to crop up anywhere within a 3D environment. A virtual bridge could thus be composited and could display bridgets in 3D, for example about actual bridges, but whether a user could virtually walk on it requires permissions beyond the scope of MPEG.
